The Challenges of Big Data for BI

Below is the continuation of the transcript of a webinar hosted by InetSoft on the topic of Traditional BI vs. Agile BI. The presenter is Mark Flaherty, CMO at InetSoft.

Mark Flaherty (MF): In terms of social networking, people are trying to understand who the main influencers in social networks are so that they can market to them. Then they want to know how effective those marketing campaigns are and whether those are the right influencers for reaching other people in the social network. In other words, they want to cause a ripple effect across the social network that generates more sales.

In terms of sensor data, I would say supply chain and distribution channel optimization is most definitely the area of focus. It's also relevant from a green computing perspective, and from a cost-saving perspective. People are analyzing where money is being spent on energy, where they can save it, and where they can reduce their overall costs.

The bottom line is that we are dealing with large volumes of data, and in addition, we have low-latency requirements for getting that data so we can make quick decisions, present offers in real time, and so on. Large data volumes tend to present problems for traditional kinds of analysis.

Another area is sentiment analytics, where people look at external data sources to see what customers are saying about their products and services. They want to find out whether there is something they need to understand, how many complaints there are, and whether there are faults that regularly occur in a product and get voiced on social sites. Another area of interest for unstructured content is competitive analysis. There is a whole range of applications emerging that focus on that kind of data.


Big data presents problems for BI systems that are perhaps not seen with the smaller volumes of data within the enterprise. One is the ability to capture low-latency data. For example, a few of the applications I have already mentioned, such as online advertising and location-specific advertising on mobile devices, need to look at large volumes of data but with very, very low latency. That can be a challenge for the data management system.

Big data also requires data integration tools that can connect to large data sources. For example, connectivity to Web logs is probably mainstream these days, but connectivity to online gaming data sources is probably not, so you have to check whether data integration vendors can support those requirements.

Data transformation and integration on large data volumes is a challenge. It's all well and good to have a database that can scale up for large data volumes, but if you can't get the data in there because of a data integration problem, you'll be prevented from analyzing it.

Your analysis tools can't afford to do a full refresh; instead, they need to be able to detect the changes. Perhaps you need power loading capability to get that data in rapidly. Then there is the question of where to put the data so that you can analyze it. Should it go into a relational database, or somewhere else? What happens if you want to maintain history on these kinds of data volumes? Do we have to analyze everything online, or can we do some of these large-volume analyses in batch? There is another term that comes up with big data and business intelligence, which is Hadoop for BI.
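To make "detect the changes" more concrete, here is a minimal Python sketch of one common approach, watermark-based incremental loading: instead of reloading everything, each run copies only the rows modified since the previous run. It assumes each source record carries a last-modified timestamp; the `Row` type, the `updated_at` field, and the `incremental_load` function are hypothetical names for illustration, not part of any InetSoft product or specific integration tool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Row:
    # Hypothetical source record: a key, a measure, and a last-modified timestamp.
    key: str
    value: float
    updated_at: datetime


def incremental_load(source, target, last_watermark):
    """Copy only rows modified after last_watermark into target (a dict keyed by row key).

    Returns the new watermark so the next run can resume from where this one stopped.
    """
    new_watermark = last_watermark
    for row in source:
        if row.updated_at > last_watermark:
            # Upsert: insert new keys and overwrite rows that have changed.
            target[row.key] = row
            new_watermark = max(new_watermark, row.updated_at)
    return new_watermark
```

Each run scans the source but writes only the changed rows, and the returned watermark is stored for the next run. A real pipeline would typically push the `updated_at` filter down to the source (for example, into a SQL `WHERE` clause or a change-data-capture feed) so unchanged rows are never read at all.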

|

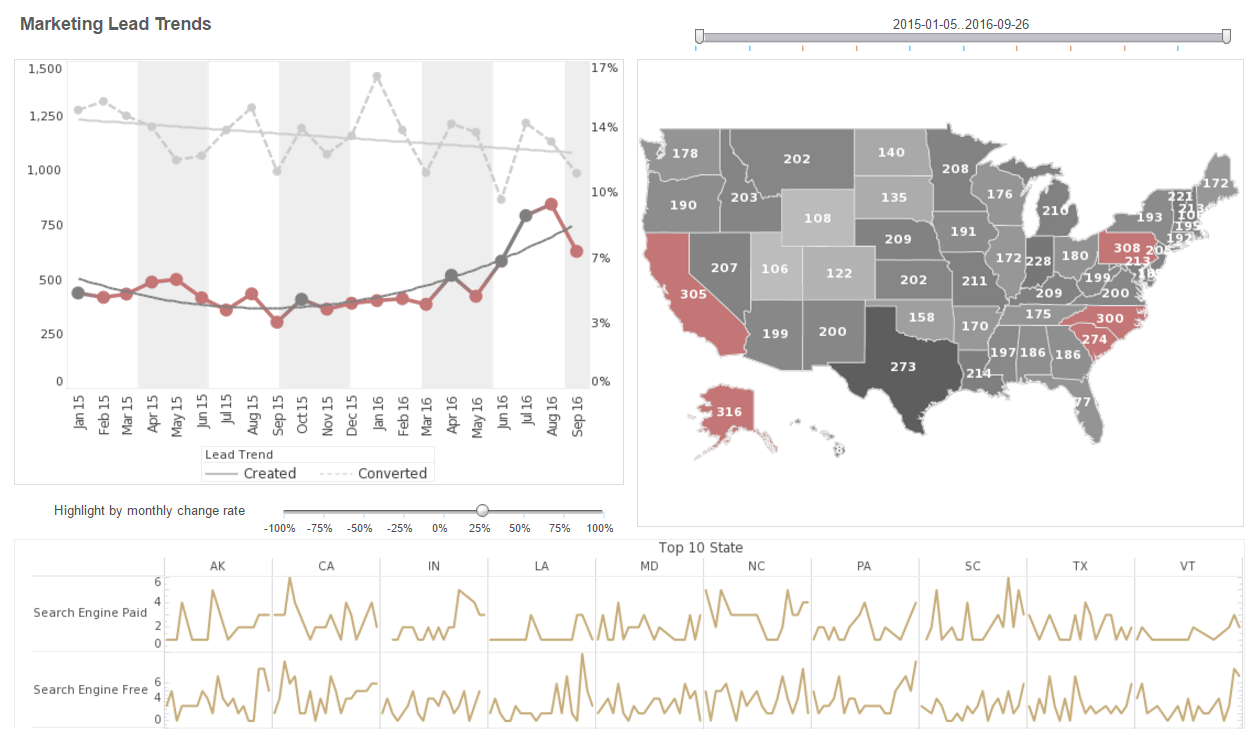

View the gallery of examples of dashboards and visualizations. |

What is meant by Hadoop for BI?

Hadoop is a batch processing framework that can scale analytical processing across thousands of machines. Effectively, what you are doing is a kind of batch processing on a large volume of data. But it's also possible to create analytical functions using MapReduce, Hadoop's programming model, to process large volumes of data.
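As a rough illustration of how a MapReduce-style analytical function is structured, here is a single-process Python sketch of the map, shuffle, and reduce phases, totaling sales by region. It only simulates the programming model in memory and does not use Hadoop's actual APIs; the `region,amount` input format and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple


def map_phase(record: str) -> Iterator[Tuple[str, float]]:
    # Hypothetical input: one "region,amount" record per line; emit (region, amount).
    region, amount = record.split(",")
    yield region.strip(), float(amount)


def shuffle(pairs: Iterable[Tuple[str, float]]) -> dict:
    # Group every emitted value by key, as the framework does between map and reduce.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list) -> Tuple[str, float]:
    # Aggregate all values for one key; here, total sales per region.
    return key, sum(values)


def map_reduce(records: Iterable[str]) -> dict:
    mapped = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(k, vs) for k, vs in shuffle(mapped).items())


if __name__ == "__main__":
    sales = ["east,100", "west,50", "east,25.5"]
    print(map_reduce(sales))  # {'east': 125.5, 'west': 50.0}
```

In a real Hadoop job, the map and reduce calls run in parallel on many machines and the framework performs the shuffle across the network. That distribution is what lets the approach scale, and it is also why it suits batch analysis better than low-latency queries.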

Some people think this technology is useful for absolutely everything. But if you really need low-latency data, and that's critical to your business, then this kind of technology is not really geared up for it. If you are looking for a small subset of data out of a very large data set, then again I would look carefully at where to store your data. It might be better to reduce the data down to manageable blocks rather than relying only on access via a relational database.
