How Data Virtualization Supports Business Agility

This is the transcript of a webinar hosted by InetSoft on the topic of "Agile BI: How Data Virtualization and Data Mashup Help." The speaker is Mark Flaherty, CMO at InetSoft.

Today, enterprise architectures need agility to support business initiatives, and data virtualization can play a key role in this. In this webinar we will provide insights on how data virtualization brings information agility to your enterprise architecture projects so that they deliver business value and significant competitive advantage.

The focus of this webinar is on how to think about different information delivery strategies to help enterprise architecture as a whole be very business focused. So while we will get somewhat technical to address some of the key challenges, the idea is really to provide the framework primarily, and we will talk about some of the other ways.

Today we will talk about the evolution of this whole area of business and enterprise architecture. Obviously those are joined at the hip. There are different perspectives on this, and they are changing. Given this context, we want to think about the role of information in this coming together of business and enterprise architecture.

Think of information as the new oil that lubricates and flows through both of them, connecting the two in meaningful ways. Data virtualization has a very important role to play there. We will briefly look at what data virtualization is and then quickly move on to the role that it plays in the enterprise architecture.

Focus On Some Data Virtualization Examples

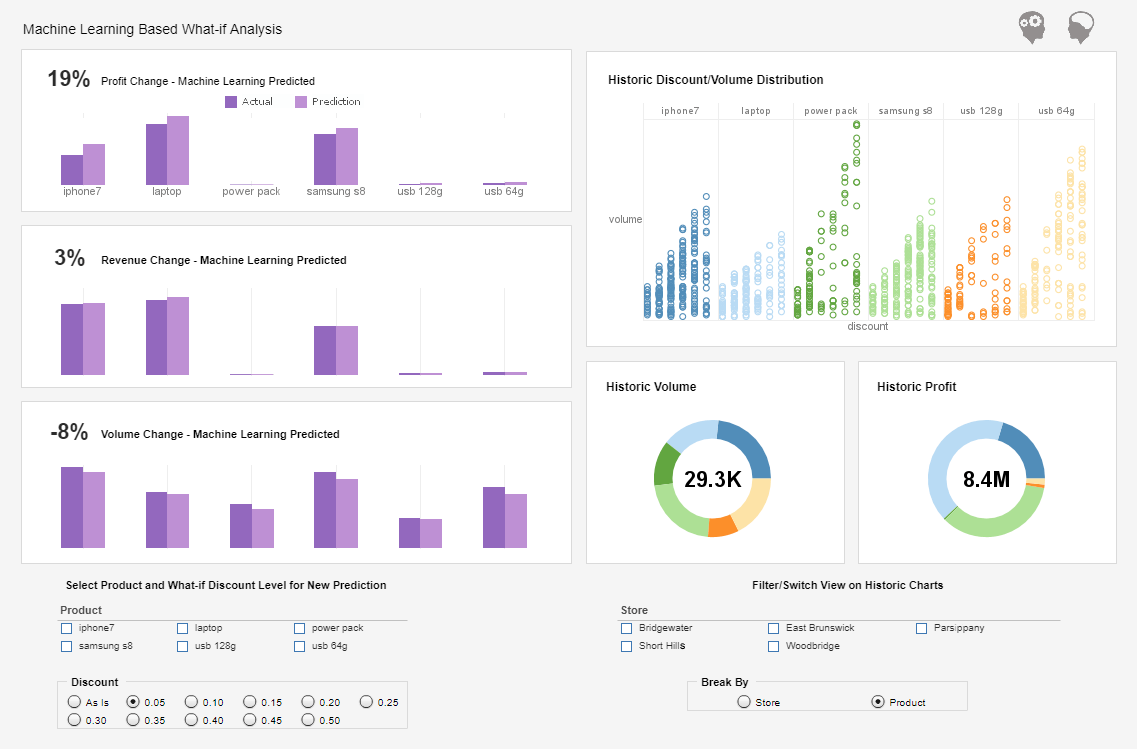

I thought the best way to do that would be to focus on some examples and use cases, and in the process also emphasize how data virtualization interacts with other enterprise and information management tools. We will look at informational applications like business intelligence, analytics, and MDM, and at transactional applications that might be using a SOA suite or something like that. Then there will be a place for us to address the typical challenges, the "what about" types of questions. Those will be partly addressed upfront, but you will also have the opportunity to ask us more such questions. So with that set, let me move forward.

The overall goal of data virtualization is agile business, and we really need to put business at the center of this whole discussion. You know, we are not really interested in building pretty architecture pictures here. The goal is to enable a fast, incremental, business-value-driven evolution of the organization, and so we ask: what role do enterprise architecture and information management play in that? How can we make that iterative agility, as much as possible, self-service and enabled by the business people? You want pervasive business intelligence at the edges of the organization, not all centralized.

The idea is virtualized access to information as a service, and we will explore what that means architecturally. You want to be able to deliver the right information to the right person or persons at the right time, and not make that very complex or difficult to do. In this context, enterprise architecture is evolving away from being just a set of boxes that say, well, here is my IT or infrastructure architecture, here is my messaging architecture, here is my information architecture, my business process architecture, and my application and solution architecture.

All of those are still very important. But I think one of the main changes that we are seeing in enterprise architecture is that it is evolving and aligning itself more to business capabilities. Those business capabilities are not necessarily division or function specific; they could be combinations of functions. So customer-facing capabilities, manufacturing capabilities, supply chain and partnership capabilities, and innovation capabilities in the product area are all considered. The idea is to think about what the business really needs and what business capabilities are needed, and then align the right technologies to support that.

Is Data Virtualization Still Popular?

Yes, data virtualization remains a relevant and popular approach in modern data management, though its adoption has evolved with the rise of cloud computing, data lakes, and real-time analytics platforms. Data virtualization allows organizations to access and query data from multiple sources without physically moving or duplicating it, creating a unified logical view. This approach reduces the complexity and cost associated with traditional ETL (Extract, Transform, Load) processes, while accelerating analytics and decision-making.
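To make the idea of a unified logical view more concrete, here is a minimal sketch in Python. It is only an illustration, not how any particular data virtualization product works: the `VirtualView` class, the two stand-in sources (an in-memory SQLite table playing the role of a relational system, and a plain list playing the role of records from a SaaS API), and all field names are hypothetical. The point it tries to show is that the view stores no data of its own; each query reads the sources as they are at that moment and joins them on the fly.

```python
import sqlite3
from typing import Callable, Dict, Iterable, List, Optional

Row = Dict[str, object]
Source = Callable[[], Iterable[Row]]

# Hypothetical source 1: a relational system, stood in for by in-memory SQLite.
_crm = sqlite3.connect(":memory:")
_crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
_crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])


def crm_customers() -> Iterable[Row]:
    """Read customers from the source system each time this is called."""
    cursor = _crm.execute("SELECT id, name FROM customers ORDER BY id")
    return [{"customer_id": cid, "name": name} for cid, name in cursor]


# Hypothetical source 2: records shaped the way a SaaS API might return them.
ORDERS: List[Row] = [
    {"customer_id": 1, "total": 120.0},
    {"customer_id": 1, "total": 80.0},
    {"customer_id": 2, "total": 50.0},
]


def saas_orders() -> Iterable[Row]:
    """Return the orders as they exist in the source right now."""
    return ORDERS


class VirtualView:
    """A logical join of two sources, evaluated only when queried."""

    def __init__(self, left: Source, right: Source, key: str) -> None:
        self.left = left
        self.right = right
        self.key = key

    def query(self, predicate: Optional[Callable[[Row], bool]] = None) -> List[Row]:
        # Index the right-hand source by the join key for this query only.
        index: Dict[object, List[Row]] = {}
        for row in self.right():
            index.setdefault(row[self.key], []).append(row)

        # Join at query time; nothing is persisted in the view itself.
        result: List[Row] = []
        for left_row in self.left():
            for right_row in index.get(left_row[self.key], []):
                merged = {**left_row, **right_row}
                if predicate is None or predicate(merged):
                    result.append(merged)
        return result


# One logical view over both systems, joined on the shared customer_id field.
view = VirtualView(crm_customers, saas_orders, key="customer_id")
```

A consumer would call something like `view.query(lambda r: r["total"] > 100)` to get customer names alongside order totals. Because the sources are read on every call, an order added to the SaaS side shows up in the next query with no batch load in between. A real virtualization layer would also push filters down to the sources and cache selectively, which this sketch leaves out.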

Organizations continue to rely on data virtualization for several reasons. First, it provides a faster way to deliver integrated data to business intelligence and reporting tools, enabling real-time or near-real-time insights without waiting for batch processing. Second, it allows data teams to maintain security, governance, and compliance centrally, since the physical data remains in its source systems. Third, data virtualization supports hybrid and multi-cloud environments, making it easier to query distributed data across on-premises systems, cloud warehouses, and SaaS applications without complex data replication.

While newer trends like data mesh, streaming data pipelines, and self-service analytics platforms are gaining traction, data virtualization still complements these architectures. Many organizations use it to provide a unified access layer over heterogeneous sources, accelerate data exploration, and reduce IT overhead. Its popularity persists especially in enterprises that need agility in analytics, centralized governance, and minimal disruption to existing data infrastructures. In short, while the landscape of data integration continues to evolve, data virtualization remains a valuable tool for connecting and leveraging diverse data sources efficiently.