InetSoft Webinar: The Many Dimensions to Big Data Analytics

This is the continuation of the transcript of a webinar hosted by InetSoft on the topic of "Taking the Pragmatic Approach to Big Data Analytics." The speaker is Jessica Little, Marketing Manager at InetSoft.

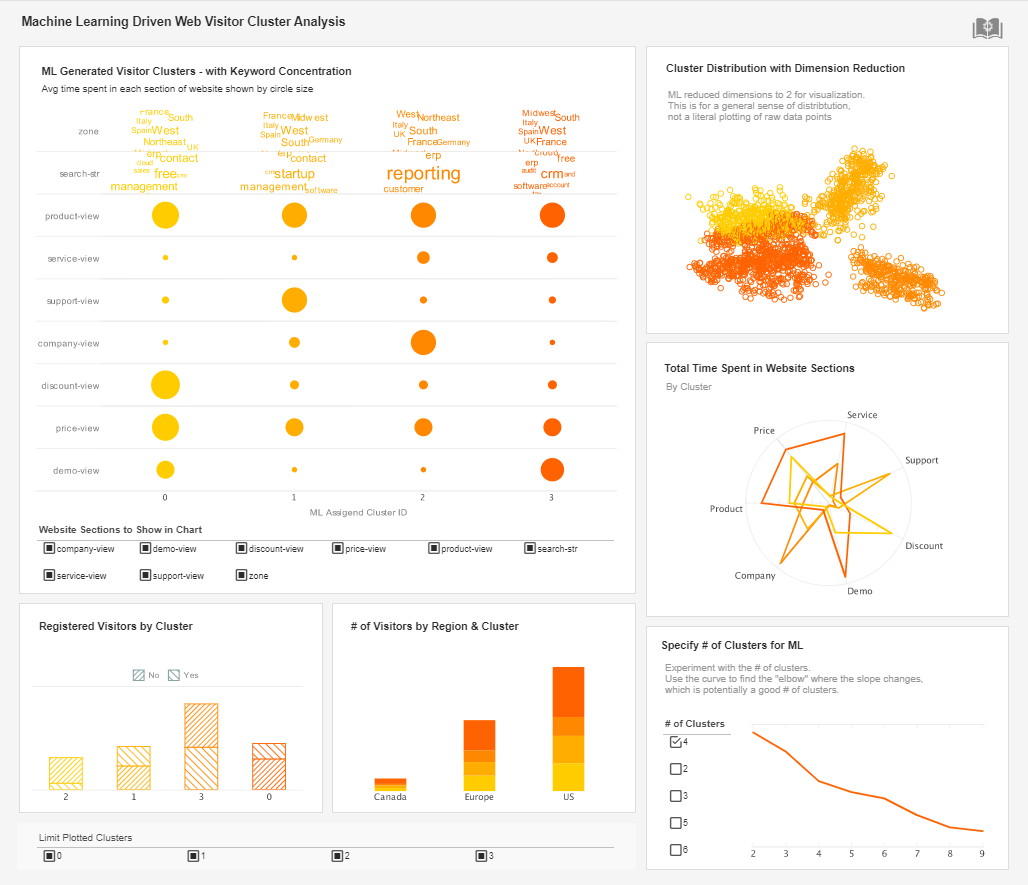

There are many dimensions to big data analytics: a scalable architecture, allowed storage, and advanced analytic capabilities. Another point that I read in the report was that, across industries, the business case was strongly focused on customer-centric objectives.

What they saw across all of the industry groups, and they have 12 macro industry groups from 26 major industries, is that for all of them the business case is being driven by those customer-centric objectives. They looked at organizations across the spectrum of Big Data activity. For those organizations that are already piloting or implementing Big Data initiatives, almost half of them, 49%, are driven by customer-centric outcomes.

They want to get to know the customers better. They want to improve the customer experience. They want to understand how to serve those customers better, and I think that connects very much to this digitalization of things. Customers today have very different expectations when they interact with organizations, and they have a much greater ability to express themselves, positively or negatively, about their experiences with those companies.

Another of the key findings was about how organizations are getting started. I read something about how they were beginning to pilot implementations using existing technology and sources of data. I think that was one of the surprising findings: they saw organizations starting their Big Data activities with internal data that was already within their systems. This is compared to where a lot of the hype has been, around social media and bringing in other external data.

Pragmatic Approach to Big Data

What they found is that organizations are taking a very pragmatic approach to Big Data. They’re bringing in an infrastructure that can handle the volume of data, which as I said is much more accessible to folks now, and the variety of data types. In the past it was very structured data, but they have data like e-mails from clients or logs from a call center that many of them haven’t been able to process in the past because of constraints in technology and processing.

And what they saw is organizations taking a very pragmatic approach by bringing in the capabilities to deal with this untapped data that they already have within their organizations. They are beginning to understand how to use these new tools and technologies, but they’re building those base capabilities with internal data because they know that there are a lot of untapped insights buried in what they already have.

It’s a much more pragmatic way because they started with what they’re more familiar with while they’re learning the new tools, and they step forward from there. That makes a lot of sense. For any BI project, we often talk about that same pragmatic approach: start small. Start with what you know. Gain some experience and expertise, and then begin to expand as you gain familiarity and comfort level with it.

I think that pragmatism goes into one of the other key findings, as well. When they looked at how organizations are moving through this Big Data progression, this Big Data adoption, what they saw is that almost 50% of the marketplace, 47%, is in a stage where they are focused on developing a very clear strategy about what business challenges they want to tackle with Big Data. What kinds of technologies would that require them to bring in or expand in their current infrastructure?

What kinds of skills would that require them to start introducing, or to build up from the skills that they already have?

Pilot Stage of Our Big Data Project

It’s a very pragmatic approach that says we’re going to start with a strategy. We’re going to have a blueprint. We’re going to understand how we move through this, and then they move into the pilot stage of their Big Data project. During the pilot stage there is that focus on making sure that they can measure and understand those requirements.

In the past you tended to see organizations skipping through the pilot stage, and they would go from their plans and get the things implemented and roll them out directly. We don’t see that as much in Big Data because it is a new frontier. So people are taking a very pragmatic approach, understanding what’s going to be required of them and what returns they can expect, and then rolling it into implementation.

The pilot stage should start with a razor-sharp definition of success: pick one or two business questions the pilot must answer and translate those into measurable outcomes (e.g., reduce late shipments by 15%, surface top 3 root causes of quality defects within 48 hours, or produce a \$X/month forecast with Y% accuracy). Scope the pilot narrowly—limit the number of data sources, the time window, and the user group—so you can move fast and prove value rather than getting lost in plumbing. In my experience, pilots that try to “do everything” become living proof that integration is hard; the smarter play is to do one meaningful thing end-to-end and make the rest optional for later phases.
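One way to keep that definition of success honest is to write the outcomes down as data before the pilot starts, so the review at the end is a mechanical comparison rather than a debate. The following is only a minimal sketch in Python; the class, the function, the metric names, and all of the numbers are hypothetical and are not taken from the report or from any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """One measurable pilot outcome (names and numbers are hypothetical)."""
    name: str
    baseline: float
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # A criterion is met when the observed value reaches the target
        # in the direction that counts as an improvement.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def review_pilot(criteria, observed):
    """Return {criterion name: met?} for each criterion that has an observed value."""
    return {c.name: c.is_met(observed[c.name]) for c in criteria if c.name in observed}


# Hypothetical criteria: a baseline of 200 late shipments per month with a
# 15% reduction goal (target 170), and a forecast accuracy goal of 85%.
criteria = [
    SuccessCriterion("late_shipments_per_month", baseline=200.0, target=170.0,
                     higher_is_better=False),
    SuccessCriterion("forecast_accuracy_pct", baseline=70.0, target=85.0),
]

# Hypothetical values measured at the end of the pilot.
results = review_pilot(
    criteria,
    {"late_shipments_per_month": 165.0, "forecast_accuracy_pct": 80.0},
)
print(results)
```

Keeping the baseline next to the target makes it easy to show at the pilot close how far each measure actually moved, even for a criterion that was not met.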

Next, design the architecture and team around speed and repeatability: choose a minimal stack that supports ingestion, transformation, storage, and visualization with good observability, and assign clear owners for data, platform, and product. Identify the canonical data sources up front, map the data quality issues you expect, and budget time for simple but effective cleansing and lineage documentation—teams underestimate this cost. Staffing should mix domain experts, a data engineer, a data analyst, and a product owner who can translate executive needs; short weekly checkpoints with a fast feedback loop to users are essential to keep the pilot honest and aligned.
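To make the point about budgeting for data quality concrete, a first pass can be as simple as counting missing values and duplicate rows in each canonical source before any cleansing is planned. This is a rough sketch using only the Python standard library; the function name, the field names, and the sample call-center rows are all made up for illustration.

```python
def profile_records(records, required_fields):
    """Count missing values per required field and exact duplicate rows."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for record in records:
        for field in required_fields:
            value = record.get(field)
            if value is None or value == "":
                missing[field] += 1
        # A row counts as a duplicate when every required field repeats exactly.
        key = tuple(record.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


# Hypothetical call-center log rows.
sample = [
    {"ticket_id": "T1", "customer_id": "C9", "opened_at": "2024-01-03"},
    {"ticket_id": "T2", "customer_id": "", "opened_at": "2024-01-04"},
    {"ticket_id": "T1", "customer_id": "C9", "opened_at": "2024-01-03"},
]
report = profile_records(sample, ["ticket_id", "customer_id", "opened_at"])
print(report)
```

A report like this, run once per source at the start of the pilot, gives the team a rough number to put against the cleansing effort instead of a guess.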

Finally, define governance, risks, and an exit strategy before the first byte is loaded. Agree on privacy and access rules, version the schemas and transformations, and pick 3–5 success metrics (technical and business) that will be reviewed at the pilot close. Plan for knowledge transfer: create reproducible runbooks, a short ops playbook for handoff, and a prioritized backlog of features for scaling. If the pilot proves the hypothesis, you’ll want to iterate quickly. If it fails, you should be able to extract the lessons without stranded resources. Both outcomes are valuable, but only if the pilot was designed to teach you something concrete.