Geospatial Analysis Taking Off

This is the transcript of a podcast recorded by InetSoft on the topic of "Geospatial Analysis Taking Off". The speaker is Jessica Little, Marketing Manager at InetSoft.

Geospatial analysis has taken a little while to get off the ground. It has taken off in recent years. What are the trends that are leading to this interest? There are a few non-technical reasons. Everything has got to be somewhere. Information technology is catching up.

We also know that Tobler’s first law of geography states that everything is related to everything else, but nearby things are more related than distant things. So if you look at the fact that physical things have to have a location, and that things are related, they are simply more related when they are close by.

That’s really quite important for a number of business reasons. How we deal with these relationships inside the business process, these flows of materials or information from point to point, is what geospatial technology is for.

Inside a high-performance database there is a set of standards-compliant capabilities for geospatial data. It is an enterprise-grade implementation, such that it fits into everything else an enterprise does. It is a problem when a lot of implementations of geospatial capabilities sit outside of the main enterprise process, almost like they are off to the side.

The problem is that when you want to do some location-based analysis, you have to move that data out into this GIS environment, do your analysis, and then move it all back. The better approach is to have the geocoding in the primary database. This way the analysis is much quicker. Another advantage is that it is free, in the sense of being a feature of the database, rather than having to purchase another standalone GIS application.
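To make the idea of keeping location data in the primary database concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in; the podcast does not name a database, and a real enterprise system would use its own spatial types and spatial indexes. The `customers` table, its columns, and the `customers_in_box` helper are all hypothetical.

```python
import sqlite3

# Stand-in for the primary database: coordinates live next to the customer data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, lat REAL, lon REAL)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        ("Alice", 29.42, -98.49),
        ("Bob", 29.60, -98.40),
        ("Cara", 40.71, -74.00),
    ],
)
# An ordinary index on the coordinates; real spatial indexes are more specialized.
conn.execute("CREATE INDEX idx_customers_lat_lon ON customers (lat, lon)")


def customers_in_box(conn, south, west, north, east):
    """Return names of customers inside a latitude/longitude bounding box."""
    rows = conn.execute(
        "SELECT name FROM customers "
        "WHERE lat BETWEEN ? AND ? AND lon BETWEEN ? AND ? "
        "ORDER BY name",
        (south, north, west, east),
    )
    return [row[0] for row in rows]


nearby = customers_in_box(conn, south=29.0, west=-99.0, north=30.0, east=-98.0)
print(nearby)  # ['Alice', 'Bob']
```

The point of the sketch is only that the location filter runs as a normal query where the data already lives, so nothing has to be exported to a separate tool and moved back.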

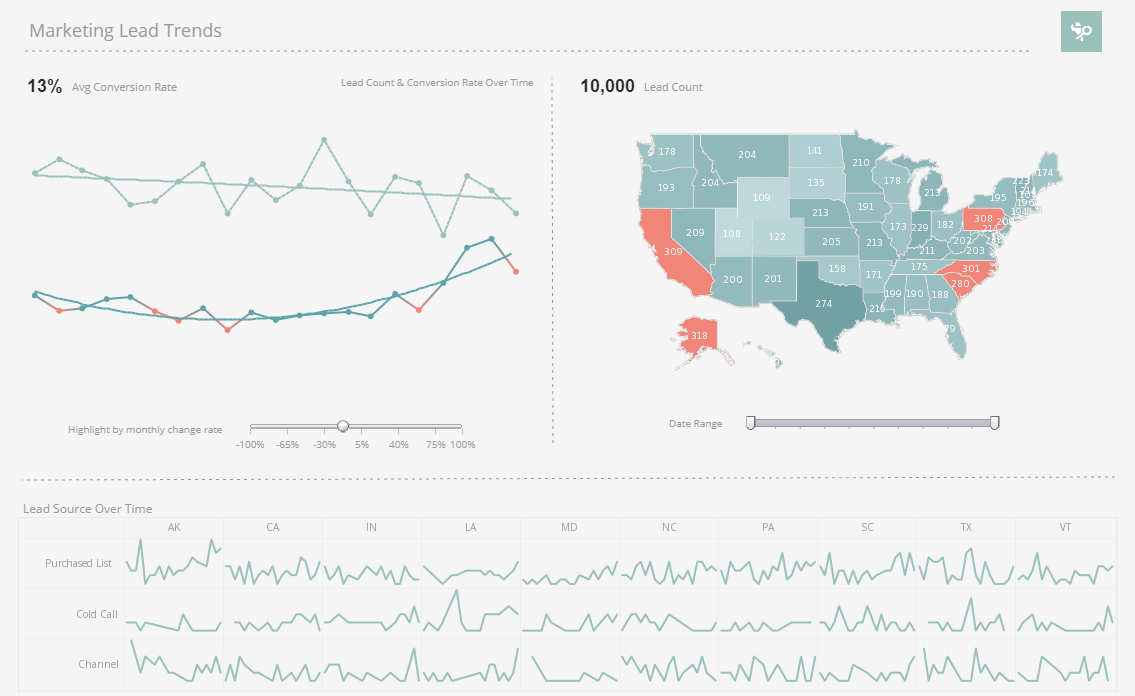

The indexing, all of the things that you need to do geospatial analysis, are inside the database. It’s just a feature set. That has led to some really interesting implementations. Let’s talk about some of them. Working in a geospatial world, we might not be used to these massive applications, but recently we were working with a consumer products company that has taken all of their customer data, millions of customers. They’ve assembled the customer histories. They’ve organized the information about the customer by location.

It was a relatively simple process. It only took them 2 or 3 weeks to do that. They were able to do what’s called geocoding. They had the customer address, and they used a third-party solution to assign latitude and longitude to that. So that allowed them to do clustering, and look at groups of customers.
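Once addresses have latitude and longitude attached, grouping customers can start very simply. The sketch below bins geocoded customers into grid cells, which is one basic way to form location clusters. It is an illustration only: the podcast does not say which clustering method the company used, and the `grid_cluster` function, the cell size, and the sample records are assumptions.

```python
import math
from collections import defaultdict


def grid_cluster(points, cell_deg=0.1):
    """Group (customer_id, lat, lon) records by the grid cell they fall in.

    cell_deg is the cell size in degrees (an assumed value, roughly 11 km
    of latitude per 0.1 degree).
    """
    cells = defaultdict(list)
    for customer_id, lat, lon in points:
        # Customers whose coordinates floor to the same cell share a key.
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[key].append(customer_id)
    return dict(cells)


# Hypothetical geocoded customers: (id, latitude, longitude).
customers = [
    ("c1", 29.42, -98.49),
    ("c2", 29.43, -98.48),
    ("c3", 40.71, -74.00),
]
clusters = grid_cluster(customers)
for cell, members in clusters.items():
    print(cell, members)
```

Here `c1` and `c2` land in the same cell and `c3` lands in its own. Density-based or k-means clustering would be the natural next step for data with millions of customers.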

They can look at things like economic factors or cultural factors that would influence how their products are perceived. They could make that information available to every executive, every regional manager, and every salesperson in the organization on their cell phone. They can go to a store and talk to that retailer. The system automatically finds where they are and tells them which retailer to go to talk to. Then it pulls all the information about that customer on the iPad or the cell phone or whatever.
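The step where the system finds where the salesperson is and picks the retailer to visit comes down to a nearest-location lookup. A minimal sketch, assuming the device reports latitude and longitude and that retailers are stored as simple records, uses the haversine great-circle distance. The function names, the retailer names, and the coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_retailer(device_lat, device_lon, retailers):
    """Return the retailer record closest to the device's position."""
    return min(
        retailers,
        key=lambda r: haversine_km(device_lat, device_lon, r["lat"], r["lon"]),
    )


# Hypothetical retailer records.
retailers = [
    {"name": "Main Street Market", "lat": 29.42, "lon": -98.49},
    {"name": "Northside Grocery", "lat": 29.60, "lon": -98.40},
]
closest = nearest_retailer(29.43, -98.49, retailers)
print(closest["name"])  # Main Street Market
```

A linear scan like this is fine for a few thousand retailers. At larger scale the lookup would normally go through a spatial index in the database rather than through application code.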

Geospatial is moving now into tracking individual movements via cell phones. You know who I am, where I am going. If you walk by a store, maybe they can offer you something on your phone. Those kinds of location-based applications are happening. The movement in IT to mobile platforms is hugely important. The point is that to make sense of the data that comes from mobile devices, you need to know where they are. And you need to know it instantly. You can’t wait a minute. People are moving past a location quickly.

Location is part of the context. It does need to be real-time. It does need to be standardized because each device can have a different interface and they have different ways of dealing with information inside the device. The information has to be standardized so it can be handled by various other applications, so it can be routed appropriately. All of these conditions exist now.

Another customer, a household name, is in the parcel delivery business. They have sensors in all of their vehicles. They have sensors in the handheld devices. They are pulling all of that information together into a data warehouse and analyzing it in near real-time to optimize operations and logistics. They even let consumers go to their Web site, enter their delivery address, and see the real-time status of delivery.

How Parcel Delivery Companies Optimize Driving Routes

Parcel delivery companies rely heavily on route optimization to ensure packages reach customers quickly and cost-effectively. The foundation of this optimization is advanced routing software that evaluates multiple factors, including delivery addresses, road conditions, traffic congestion, and driver schedules. Instead of simply mapping the shortest distance, these tools analyze the entire network of stops to create the most efficient path. The result is fewer miles driven, less fuel consumed, and faster deliveries without compromising accuracy.

Data plays a central role in refining delivery routes. Companies use real-time traffic feeds, GPS data from their fleets, and even weather reports to adjust routes dynamically throughout the day. For example, if an accident causes a highway closure, the system can instantly reroute drivers to avoid delays. Historical data also feeds into the optimization process, helping companies identify patterns such as neighborhoods with frequent drop-offs, high congestion areas, or seasonal surges in demand. This predictive capability allows for smarter planning ahead of time.
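The highway-closure example can be sketched with a shortest-path search over a road graph in which closed segments are skipped. This is a simplified illustration using Dijkstra's algorithm, not a description of any carrier's actual system. The road names, travel times, and the `shortest_route` function are hypothetical.

```python
import heapq


def shortest_route(graph, start, goal, closed=frozenset()):
    """Dijkstra's algorithm over travel times, ignoring edges in `closed`.

    graph maps node -> {neighbor: minutes}; closed holds (from, to) pairs.
    Returns (total_minutes, path) or None if the goal is unreachable.
    """
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if (node, neighbor) in closed or neighbor in visited:
                continue
            heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return None


# Hypothetical road network with travel times in minutes.
roads = {
    "depot": {"highway": 5, "side_street": 9},
    "highway": {"customer": 4},
    "side_street": {"customer": 6},
}
normal = shortest_route(roads, "depot", "customer")
detour = shortest_route(roads, "depot", "customer",
                        closed={("highway", "customer")})
print(normal)  # (9, ['depot', 'highway', 'customer'])
print(detour)  # (15, ['depot', 'side_street', 'customer'])
```

In practice the travel times themselves would be refreshed from live traffic feeds, so a slowdown changes edge weights rather than removing an edge outright.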

Another major factor in route optimization is the sequence of deliveries. Software often treats this as a variant of the Traveling Salesman Problem (TSP) and applies heuristics to determine the best order in which to visit stops, because finding the exact optimum becomes impractical as the number of stops grows. Priority packages, time-sensitive deliveries, and customer preferences, such as specific delivery windows, are integrated into the calculations. By sequencing deliveries intelligently, companies can meet customer expectations while still minimizing detours and unnecessary mileage.
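One of the simplest sequencing heuristics is nearest neighbor: from the current position, always drive to the closest unvisited stop. The sketch below shows it on flat x/y coordinates to keep the arithmetic easy to follow. It ignores delivery windows and priorities, it does not guarantee the shortest overall route, and the stop names and coordinates are made up for illustration.

```python
import math


def nearest_neighbor_order(depot, stops):
    """Order stops by repeatedly visiting the closest unvisited one.

    depot is an (x, y) tuple; stops maps stop name -> (x, y).
    """
    remaining = dict(stops)
    current = depot
    order = []
    while remaining:
        # Pick the unvisited stop closest to the current position.
        name = min(remaining, key=lambda s: math.dist(current, remaining[s]))
        order.append(name)
        current = remaining.pop(name)
    return order


# Hypothetical stops on a simple grid.
stops = {"A": (5, 0), "B": (1, 0), "C": (2, 1)}
route = nearest_neighbor_order((0, 0), stops)
print(route)  # ['B', 'C', 'A']
```

Production routing tools typically start from a quick tour like this and then improve it with local search, while adding constraints such as time windows and vehicle capacity.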

Technology like telematics and machine learning further enhances optimization. Telematics systems collect data on driver behavior, vehicle performance, and stop duration, which can reveal inefficiencies such as excessive idling or long dwell times. Machine learning models use this data to improve future routing by predicting where bottlenecks or delays might occur. Over time, these systems continuously refine their recommendations, creating a feedback loop that makes routes more efficient with each delivery cycle.
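As a small illustration of how telematics data can reveal long dwell times, the sketch below flags stops whose dwell time is well above the average. It is a plain threshold rule, not a machine learning model, and the record fields (`stop_id`, `dwell_min`), the `factor` parameter, and the sample values are assumptions.

```python
from statistics import mean


def flag_long_dwell(stop_events, factor=2.0):
    """Return IDs of stops whose dwell time exceeds factor x the mean."""
    average = mean(event["dwell_min"] for event in stop_events)
    return [
        event["stop_id"]
        for event in stop_events
        if event["dwell_min"] > factor * average
    ]


# Hypothetical telematics records: minutes spent at each stop.
events = [
    {"stop_id": "s1", "dwell_min": 3},
    {"stop_id": "s2", "dwell_min": 4},
    {"stop_id": "s3", "dwell_min": 20},
    {"stop_id": "s4", "dwell_min": 5},
]
flagged = flag_long_dwell(events)
print(flagged)  # ['s3']
```

Flags like these could then serve as inputs to a predictive model, or simply prompt a planner to ask the driver what happened at that stop.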

Finally, human factors remain important in the optimization process. While software generates the optimal routes, experienced drivers often provide feedback that fine-tunes these plans. For instance, a driver might know that a particular street is difficult to navigate with a large delivery truck, or that certain customers are consistently unavailable at specific times. By combining automated optimization with driver insights, parcel delivery companies achieve the best balance between technological efficiency and practical, on-the-ground knowledge. This hybrid approach ensures reliability, speed, and cost savings while maintaining customer satisfaction.