Managing Lead Scoring Models Over Time

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "Best Practices in Data Mining." The speaker is Mark Flaherty, CMO at InetSoft.

Moderator: One to two-day training is a lot better than six-week training. Talk about models and scoring models and managing different models over time. A lot of these data mining applications can be set up such that the scoring is done automatically.

In other words, when you apply this algorithm for “next best offer,” sales increase 2.1%, for example, whereas with that other model, sales actually decreased by 0.2% or 0.7% or something like that. I am sure some of that stuff can be automated, but what are some of the other best practices around scoring models?

Flaherty: Definitely. For scoring of models, we have seen a lot of automation and handholding with IT. The question is, how can I automate that critical step and avoid any kind of manual intervention?

You are trying to reduce the revalidation effort. I think one critical best practice concerns database scoring, where you take the scoring algorithm and calculate the score for a new set of data. The other best practice is to continuously monitor the performance of your predictive model.

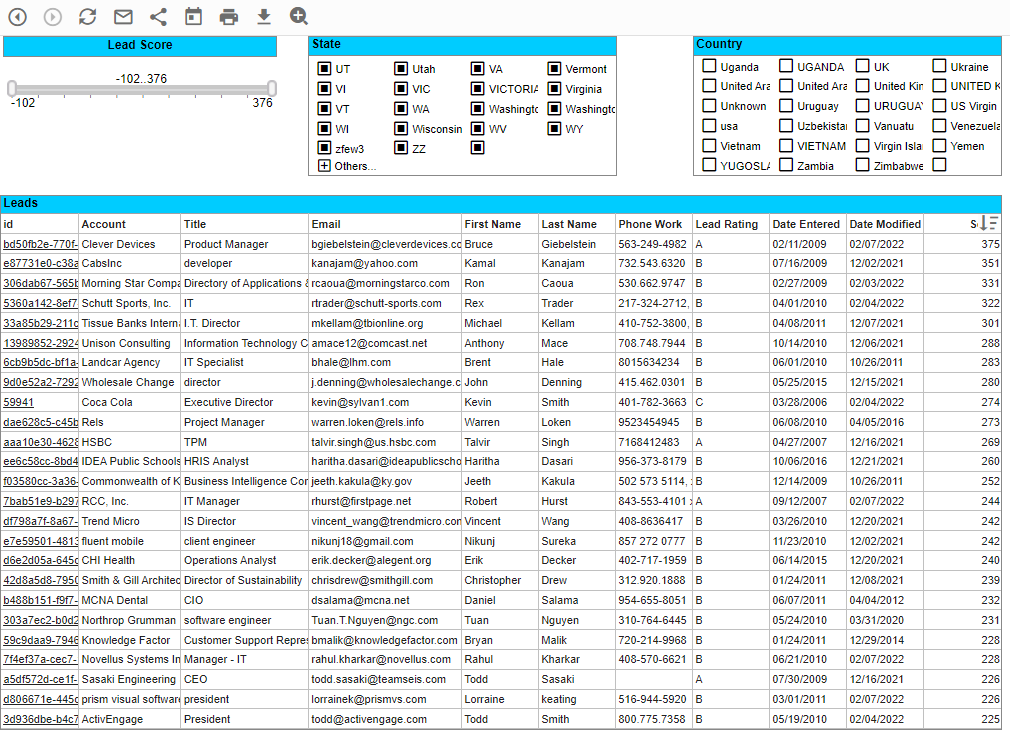

How Can I Monitor the Performance of a Lead Scoring Model?

If my model is not performing well, how will I know? How can I monitor that performance and bring that model back into the production cycle? As part of that issue, there is the question of how to do it. Some of that performance monitoring, which includes charts, reports, or alerts, is being integrated with scoring in a management framework for analytical models. So that’s an important consideration. How can I avoid the risk of model degradation and continue to guarantee the usefulness and accuracy of the model? Combining the scoring techniques with performance monitoring is an important best practice.

Moderator: What about best practices for data mining in terms of building flexibility into your campaigns and into your program for data mining? It’s just amazing how fast you can learn things and how fast you can retool what you are doing. You can refocus your efforts. You get instantaneous feedback. It’s not like the old days when you would send out your direct mail piece and six weeks later maybe you have got enough data to analyze a campaign. What is some advice you can give to companies to help them build flexibility into their programs?

Flaherty: It’s a good question, and I think this is a really exciting topic. The most important thing has been the deployment of a model and getting the right apparatus in place for that deployment. So the way that I look at it, first there is the real-time aspect of your predictive models running there, providing value to your business. They allow you to monitor how things are going at all times.

At the same time you need to provide flexibility. One of the key changes in the market recently in terms of best practices is to look at your data sources that are now out there. Ask what insight they can give you for your business. And so you have a triangulation going on. You have got the social media sphere. You know that’s a different kind of data. You don’t know necessarily who is seeing those comments and tweets, but you know the types of things that are being said and the trends.

Number one is the real-time monitoring of your data mining models’ performance. Next is having the tools set up to automate alternating among models. Then comes the idea of being open to all types of data sources that can add value to your analysis. Don’t ignore the huge changes going on in how people buy things today and how they communicate with one another.

Lead scoring models are living tools that require ongoing care to remain accurate and valuable. Over time, changes in market conditions, product offerings, buyer behavior, and data quality will degrade static models. A disciplined program of monitoring, validation, and iteration keeps scores aligned with current reality and ensures sales and marketing focus on the highest-value prospects.

Define Clear Goals and Success Metrics

Start by linking your lead scoring model to measurable business outcomes such as conversion rate, win rate, sales cycle length, and average deal value. Establish baseline metrics and expected improvements. Use these outcomes to prioritize model updates and to decide whether changes lead to tangible business impact rather than cosmetic shifts in scores.

Regular Performance Monitoring

Schedule recurring reviews of model performance using both operational and outcome indicators. Track distribution of scores, lead-to-opportunity conversion by score band, and calibration drift where identical scores produce different outcomes over time. Monitor feature stability to identify predictors that lose or gain predictive power.
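The indicators above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the band edges (40 and 70), the function names, and the input shapes are all assumptions made for the example. The population stability index (PSI) is one common way to quantify a shift in score distribution; it is not named in the text above and is swapped in here as an example.

```python
import math
from collections import defaultdict

BANDS = ("low", "medium", "high")


def band_of(score, edges=(40, 70)):
    """Map a 0-100 score to a band; the edges are illustrative assumptions."""
    if score < edges[0]:
        return "low"
    if score < edges[1]:
        return "medium"
    return "high"


def conversion_by_band(leads):
    """leads: iterable of (score, converted) pairs -> {band: conversion rate}."""
    totals = defaultdict(int)
    wins = defaultdict(int)
    for score, converted in leads:
        band = band_of(score)
        totals[band] += 1
        wins[band] += 1 if converted else 0
    return {band: wins[band] / totals[band] for band in totals}


def population_stability_index(baseline_scores, current_scores, eps=1e-6):
    """PSI over the score bands: sum((cur - base) * ln(cur / base))."""

    def shares(scores):
        counts = defaultdict(int)
        for s in scores:
            counts[band_of(s)] += 1
        # eps keeps empty bands from producing log(0) or division by zero
        return {b: max(counts[b] / len(scores), eps) for b in BANDS}

    base, cur = shares(list(baseline_scores)), shares(list(current_scores))
    return sum((cur[b] - base[b]) * math.log(cur[b] / base[b]) for b in BANDS)
```

Comparing `conversion_by_band` across review periods shows whether the same band still converts at the same rate, which is the calibration drift described above. A PSI of zero means the score distribution has not moved. The level at which a nonzero value should trigger a review is a judgment call for each team.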

Data Quality and Governance

Ensure the underlying data feeding the model remains reliable. Implement automated checks for completeness, duplicates, stale records, and anomalous values. Maintain clear ownership for data sources and transformations so issues can be traced and corrected quickly. Document data definitions and update them when business logic changes.
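A minimal sketch of such automated checks follows, covering completeness, duplicates, and stale records (anomalous-value checks depend too heavily on the specific fields to sketch generically). The field names `email`, `company`, and `last_activity`, the 180-day staleness window, and the choice of email as the duplicate key are all hypothetical.

```python
from datetime import date, timedelta

# Hypothetical required fields; substitute the ones your model actually uses.
REQUIRED_FIELDS = ("email", "company", "last_activity")


def check_leads(records, today, max_age_days=180):
    """Return the indexes of records that fail each check.

    records: list of dicts; last_activity is expected to be a datetime.date.
    """
    issues = {"incomplete": [], "duplicate": [], "stale": []}
    seen_emails = set()
    for i, rec in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            issues["incomplete"].append(i)
        # Duplicates: compare emails case-insensitively, ignoring whitespace.
        email = (rec.get("email") or "").strip().lower()
        if email:
            if email in seen_emails:
                issues["duplicate"].append(i)
            seen_emails.add(email)
        # Staleness: no recorded activity within the allowed window.
        last = rec.get("last_activity")
        if last and (today - last) > timedelta(days=max_age_days):
            issues["stale"].append(i)
    return issues
```

Returning record indexes rather than a single pass/fail flag makes it easier to route each issue to whoever owns the affected data source.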

Retrain and Recalibrate Regularly

Retrain models on recent data at a cadence appropriate for your business velocity: monthly or quarterly for fast-moving markets, less often for stable environments. Recalibrate score thresholds to preserve the intended meaning of high, medium, and low scores. When using machine learning, validate that retrained models generalize and don’t overfit short-term noise.
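One way to recalibrate thresholds, sketched below, is to derive the cut-offs from the distribution of recent scores so that each band keeps a fixed share of lead volume. This rests on an assumption: that "high" is meant to be roughly the top 20% of leads and "medium" the next 30%. If your bands are instead defined by expected conversion rate, a different approach is needed. The function name and default shares are hypothetical.

```python
def recalibrate_thresholds(recent_scores, high_share=0.2, medium_share=0.3):
    """Return (high_cut, medium_cut) so that scores >= high_cut are 'high'
    and scores >= medium_cut (but below high_cut) are 'medium'.

    The shares are illustrative assumptions, not recommended values.
    """
    ranked = sorted(recent_scores, reverse=True)
    n = len(ranked)
    if n == 0:
        raise ValueError("need at least one recent score")
    high_count = max(1, round(n * high_share))
    medium_count = max(1, round(n * medium_share))
    high_cut = ranked[high_count - 1]
    medium_cut = ranked[min(n, high_count + medium_count) - 1]
    return high_cut, medium_cut
```

With ten recent scores from 1 to 10, this returns cut-offs of 9 and 6. When many leads share the same score, more leads than the target share can land at or above a cut-off, so the resulting band sizes are worth checking after each recalibration.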

Versioning and Change Management

Adopt explicit version control for scoring rules and model artifacts. Keep a changelog documenting why changes were made, who approved them, and expected impacts. Use A/B tests or shadow deployments to compare new versions against current production without disrupting operations, and roll back quickly when necessary.
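A shadow deployment comparison can be sketched as follows: both versions score the same leads, only the current version drives decisions, and the results are compared afterwards. The threshold of 70, the function name, and the representation of models as plain callables are assumptions for illustration.

```python
def shadow_compare(leads, current_model, candidate_model, threshold=70):
    """Compare two scoring versions on the same leads.

    leads: list of (features, converted) pairs.
    Each model is a callable mapping features to a numeric score.
    """
    # For each version: [leads flagged as high, flagged leads that converted]
    stats = {"current": [0, 0], "candidate": [0, 0]}
    disagreements = 0
    for features, converted in leads:
        cur_high = current_model(features) >= threshold
        cand_high = candidate_model(features) >= threshold
        if cur_high != cand_high:
            disagreements += 1
        for name, flagged in (("current", cur_high), ("candidate", cand_high)):
            if flagged:
                stats[name][0] += 1
                stats[name][1] += int(converted)

    def precision(name):
        flagged, won = stats[name]
        return won / flagged if flagged else None

    return {
        "disagreements": disagreements,
        "current_precision": precision("current"),
        "candidate_precision": precision("candidate"),
    }
```

The disagreement count shows how much day-to-day prioritization would change if the candidate went live, and the two precision figures show whether the leads each version flags actually convert. Both are useful inputs to the changelog entry and to the rollback decision.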

Human-in-the-Loop Oversight

Combine automated scoring with human judgment. Gather qualitative feedback from sales and customer success on lead quality and why certain leads were misclassified. Use that feedback to refine feature engineering, add business rules, or adjust weighting of behavioral signals.

Segmented and Contextual Models

One-size-fits-all scoring often fails as your customer base diversifies. Consider separate models or adjusted thresholds for different verticals, geographies, account sizes, or product lines. Maintain cross-segment comparability by documenting differences and normalizing scores when needed for portfolio-level decisions.
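One simple way to normalize scores across segment-specific models is to convert each raw score into a percentile rank within its own segment. The sketch below assumes leads arrive as `(lead_id, segment, raw_score)` tuples. The tuple layout and function name are hypothetical, and percentile ranking is only one of several possible normalizations.

```python
from bisect import bisect_right
from collections import defaultdict


def percentile_within_segment(leads):
    """leads: list of (lead_id, segment, raw_score) tuples.

    Returns {lead_id: percentile}, where the percentile is the share of
    leads in the same segment scoring at or below this lead, on a 0-100 scale.
    """
    by_segment = defaultdict(list)
    for _, segment, score in leads:
        by_segment[segment].append(score)
    for scores in by_segment.values():
        scores.sort()

    result = {}
    for lead_id, segment, score in leads:
        scores = by_segment[segment]
        result[lead_id] = 100.0 * bisect_right(scores, score) / len(scores)
    return result
```

A lead at the 90th percentile of its segment is then comparable to a 90th-percentile lead in another segment, even if the two models use different raw scales. Percentiles describe relative rank only, so they do not imply that the two leads convert at the same rate.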

Transparency and Explainability

Provide clear explanations for score components and how final scores are produced. Share a concise dashboard or one-pager that shows top predictive features and typical paths that produce high scores. Transparent models build trust and speed adoption by sales teams.

Continuous Learning and Governance

Institutionalize a cadence for model review, stakeholder communication, and post‑deployment evaluation. Train teams on interpreting scores and embed success metrics into regular business reviews. Treat lead scoring as a strategic capability that evolves with your business rather than a one-off project.

Effective management of lead scoring models combines measurement, data stewardship, iteration, and human feedback. With versioning, segmentation, and governance, lead scores remain accurate and actionable, enabling sales and marketing to prioritize efforts that drive real revenue.