InetSoft Webinar: Talking About Big Data

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "Best Practices in Data Mining." The speaker is Mark Flaherty, CMO at InetSoft.

Flaherty: In that context, more and more of our customers are coming to us to talk about big data, where they have large volumes of data, different types of data, or data coming in at different speeds. Some of our more mature customers are also focusing on best practices for sampling when it comes to big data.

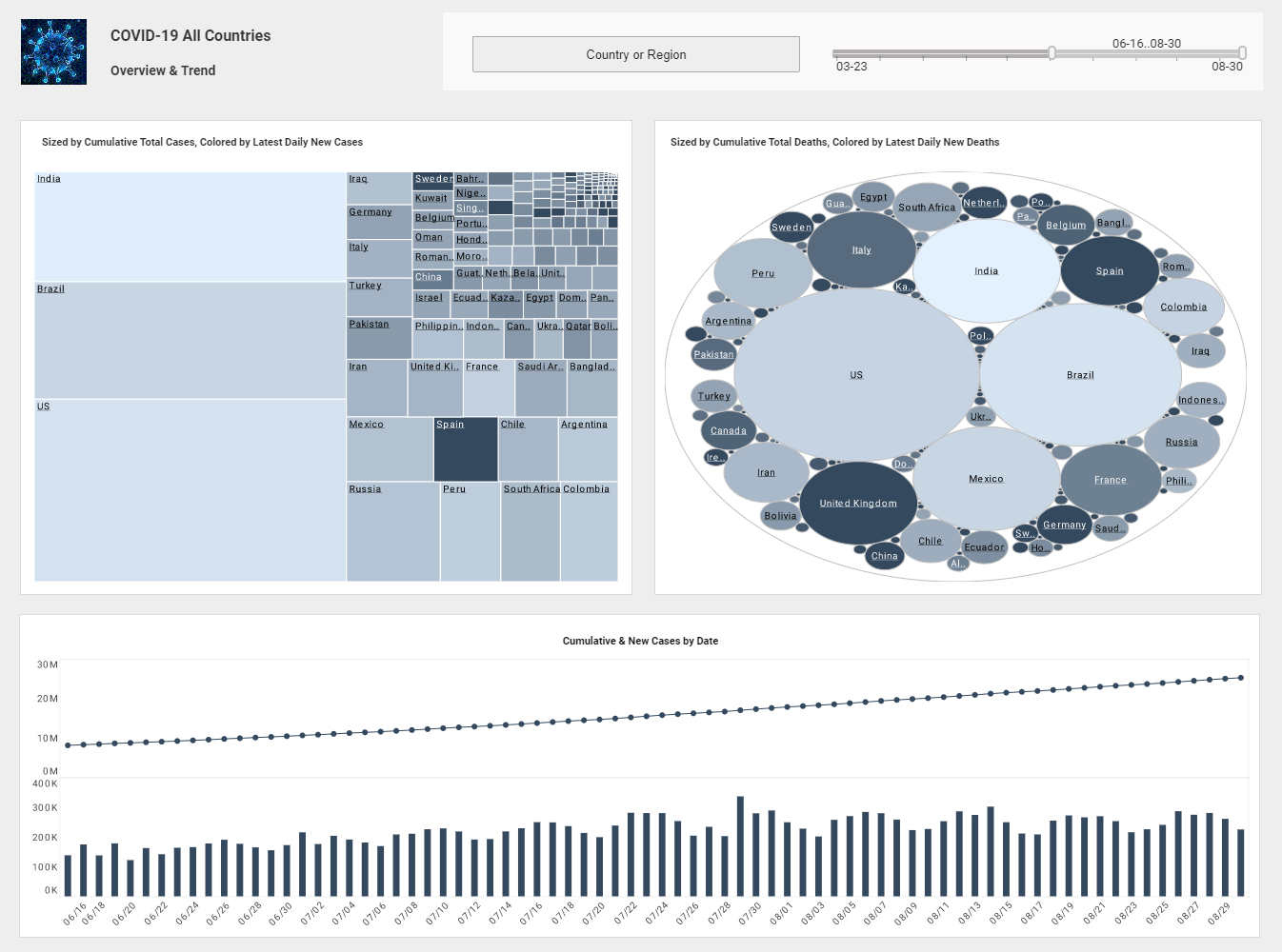

When it comes to data visualization, what are some of the best methods to use? When it comes to transformations, there are questions such as how to handle missing values. That is part of the data preparation process, and a lot of our customers are looking into best practices on that end.

Moderator: When you talk about sampling, I presume you are talking about taking a small subset of your data and creating some algorithms using that subset. Obviously, if you are trying to develop an algorithm based on a megabyte of data, it's going to run a lot faster than if you try to do that on a terabyte of data. When you do sampling, what's a good percentage of the total? Is there a best practice there?

Flaherty: I think we have seen ranges from about 2% to 6% or 7%, but when we are trying to predict something rare, such as fraud, you will need to pull a percentage from the higher end of this range. Customers are also asking: can I model on the complete set of data? Is that even a possibility? Will I be able to create a better model from a well-defined sample than by building a model on the complete set of data?
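To illustrate the point about rare events, here is a minimal Python sketch, not taken from the webinar, that draws simple random samples at 2% and 7% and counts how many rare cases land in each. The synthetic data set, the 1% fraud rate, the `is_fraud` field, and the helper names `simple_random_sample` and `count_rare` are all assumptions made for the example.

```python
import random


def simple_random_sample(records, fraction, seed=0):
    """Return a simple random sample holding `fraction` of the records."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = round(len(records) * fraction)
    # A seeded generator keeps the draw reproducible between runs.
    return random.Random(seed).sample(records, k)


def count_rare(sample):
    """Count the records flagged as the rare event (fraud in this sketch)."""
    return sum(1 for record in sample if record["is_fraud"])


# Synthetic data (assumption): 100,000 records, exactly 1% flagged as fraud.
records = [{"id": i, "is_fraud": i % 100 == 0} for i in range(100_000)]

low = simple_random_sample(records, 0.02, seed=42)   # low end of the range
high = simple_random_sample(records, 0.07, seed=42)  # high end of the range

print("2% sample:", len(low), "rows,", count_rare(low), "fraud cases")
print("7% sample:", len(high), "rows,", count_rare(high), "fraud cases")
```

With a 1% event rate, a 2% sample is expected to contain only about 20 fraud cases while a 7% sample is expected to contain about 70, which is one way to see why a rare target pushes the sampling percentage toward the higher end of the range.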
