InetSoft Webinar: How the Analytics Software Facilitates the Discovery Process

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "Best Practices in Data Mining." The speaker is Mark Flaherty, CMO at InetSoft.

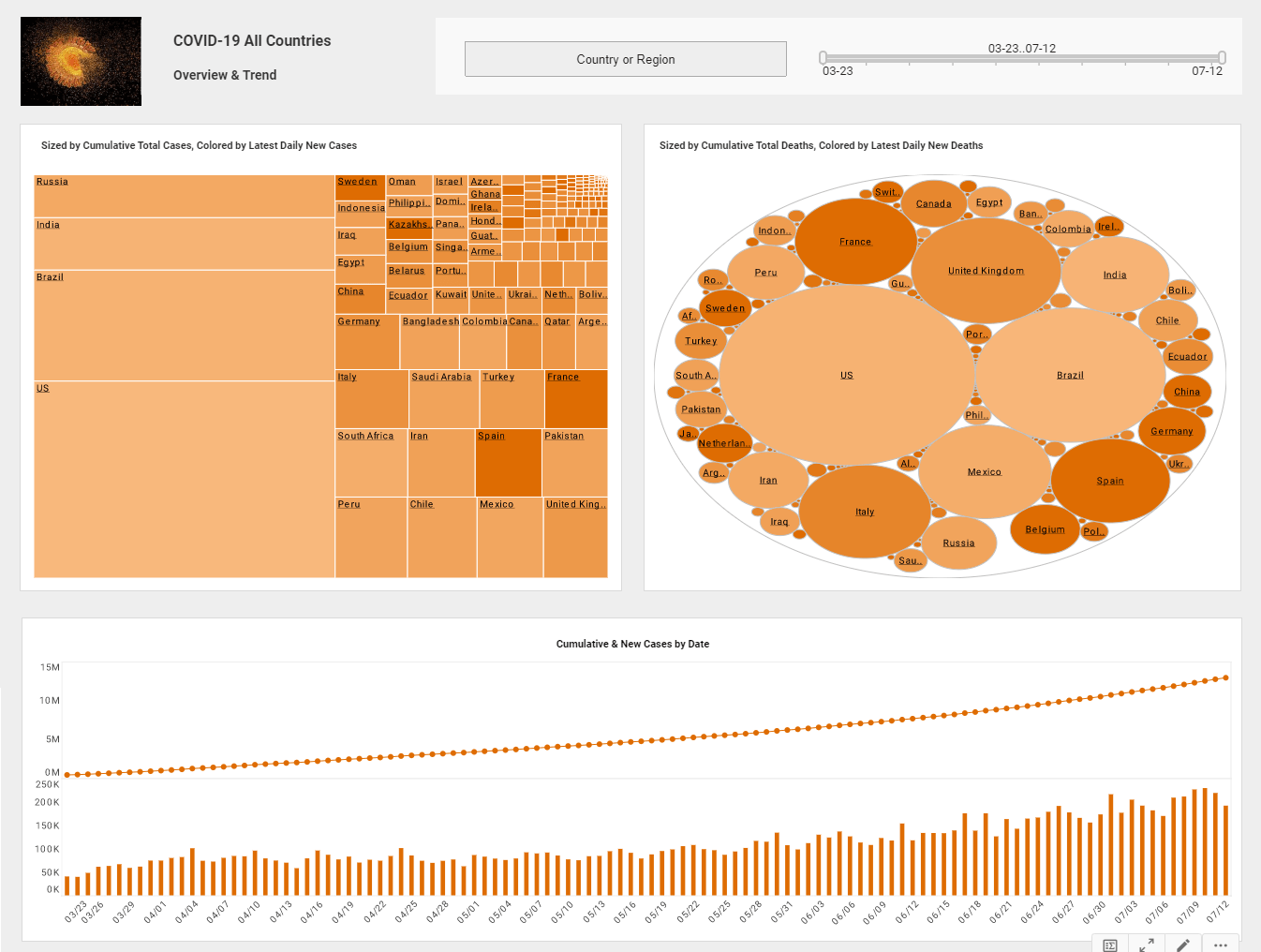

Moderator: Could you talk about how the analytics software facilitates the discovery process? You say that visual analysis software can facilitate that process. As I understand it, you connect a data set to an application that you purchase and bring in certain columns or fields. Then can you basically run a preview, apply different algorithms, and get different visualizations? Do you look for the spikes in the trend lines, or the red or green areas of a heat map?

Flaherty: I think the way things used to be done is that someone with tremendous experience and education in this area would start by doing all that work himself. As the visual analysis tools have gotten better, we can either bring in different types of people with less experience or make that expert much more productive. One of the ways we can do that is to not start off with a whole bunch of manual data transformations that could take the next two months to put together. Let the analytics software automate the data transformations.

A lot of the visualization tools now have the capability to do some level of automated data transformation to get that started. A typical example is that there are always dates in a file. You can't use a date to predict anything, but you can use a time interval. So a lot of the software will automatically do that conversion.
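As a rough illustration of the date-to-interval conversion described above, here is a minimal Python sketch. The record layout, the field names (`last_purchase`, `days_since_purchase`), and the reference date are all made up for the example; they do not come from any particular analytics product.

```python
from datetime import date

def days_since(event_date: date, reference_date: date) -> int:
    """Turn a calendar date into an interval: days elapsed before reference_date."""
    return (reference_date - event_date).days

# Hypothetical customer records; field names are illustrative only.
records = [
    {"customer": "A", "last_purchase": date(2023, 1, 15)},
    {"customer": "B", "last_purchase": date(2023, 6, 1)},
]
reference = date(2023, 7, 1)  # assumed "as of" date for the analysis

# Add the derived interval next to the raw date.
for record in records:
    record["days_since_purchase"] = days_since(record["last_purchase"], reference)
```

The derived `days_since_purchase` value is something a model can use directly, whereas the raw date is not.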

You will run some automated data prep. Then you will tell it to run some automated models, and it will run a whole slew of models and give you the comparative results. It lets you look into each one. It lets you look at exactly the modeling steps that were done. It lets you edit them, and so you are much more productive. You are very quickly looking at a whole bunch of scenarios that are not the end of the process, but that greatly facilitate the beginning of it. They get you up to speed so you can see where you need to focus when a lot of the same variables are being selected. What kinds of transformations could you then do to make those variables more powerful? If you have a hunch that one of the variables that isn't being selected is important, what could you do so that it would be detected and brought into the mix?
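The "run a slew of models and compare them" step can be sketched in a few lines of Python. This is only a toy under stated assumptions: the three candidate models, the error metric (mean absolute error on a holdout set), and the data are chosen for illustration and are not what any specific tool does.

```python
from statistics import mean, median

def fit_mean(xs, ys):
    m = mean(ys)
    return lambda x: m  # always predicts the training mean

def fit_median(xs, ys):
    m = median(ys)
    return lambda x: m  # always predicts the training median

def fit_line(xs, ys):
    # Ordinary least squares with a single predictor.
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x

def mean_abs_error(model, xs, ys):
    return mean(abs(model(x) - y) for x, y in zip(xs, ys))

def compare_models(candidates, train, holdout):
    """Fit every candidate on train and rank by holdout error, lowest first."""
    results = []
    for name, fit in candidates.items():
        model = fit(*train)
        results.append((name, mean_abs_error(model, *holdout)))
    return sorted(results, key=lambda result: result[1])

# Made-up data that follows y = 2x + 1.
train = ([1, 2, 3, 4, 5, 6], [3, 5, 7, 9, 11, 13])
holdout = ([7, 8], [15, 17])
candidates = {"mean": fit_mean, "median": fit_median, "line": fit_line}

ranking = compare_models(candidates, train, holdout)
for name, error in ranking:
    print(f"{name}: holdout MAE = {error:.2f}")
```

The ranked list is the "comparative results" view: it shows at a glance which candidates are worth inspecting and editing further.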

Then, of course, there is the capability once deployed to do a lot of things like running champion and challenger tests. Remember that multiple models need to be run on a regular basis, and the system needs to advise the people operating it when a new one should be promoted. When one has lost accuracy, perhaps, or a new one looks like it's catching on to trends a little bit faster, you might want to make that decision.
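A champion and challenger check can be sketched as follows. The function name `recommend_promotion`, the use of mean absolute error, and the `min_improvement` threshold are assumptions made for this example; a real deployment would pick its own metric and promotion rule.

```python
from statistics import mean

def mean_abs_error(actuals, predictions):
    return mean(abs(a - p) for a, p in zip(actuals, predictions))

def recommend_promotion(actuals, champion_preds, challenger_preds,
                        min_improvement=0.05):
    """Compare both models on recent data and flag the challenger for promotion
    when its error is lower than the champion's by at least min_improvement
    (expressed as a fraction of the champion's error)."""
    champion_error = mean_abs_error(actuals, champion_preds)
    challenger_error = mean_abs_error(actuals, challenger_preds)
    if champion_error == 0:
        improvement = 0.0  # the champion is already perfect on this sample
    else:
        improvement = (champion_error - challenger_error) / champion_error
    return {
        "champion_error": champion_error,
        "challenger_error": challenger_error,
        "promote": improvement >= min_improvement,
    }

# Made-up recent outcomes and the two models' predictions for them.
actuals = [100, 110, 120, 130]
champion = [90, 100, 110, 120]
challenger = [98, 108, 121, 131]

report = recommend_promotion(actuals, champion, challenger)
```

The function only advises; the decision to promote stays with the people operating the system, as described above.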

So I think a lot of the focus really is that the better data mining tools allow a better process that gets you going much faster, really leverages the power of that expert, and lets the software do some of the work. I really think that's been a huge improvement of late.

Moderator: When you begin an engagement with a customer who is new to data mining, what are two or three key best practices or basics that you share with them to make sure they get off on the right foot?

Flaherty: From the perspective of a customer who is new to data mining, a lot of the discussion always focuses on the data and the types of analytical methods. But I think you have to take a big step back and ask what kind of decisions you are trying to make. Of course, end users will never be able to predict every scenario, opportunity, or risk, but I think it is important to understand that decision-making process and how the analytic professionals, statisticians, or data miners can work closely with IT.

IT plays one of the roles here from a data preparation perspective. Now how can they come together to jointly arrive at a decision? What are the key decisions they need to make, what kind of methods are applicable, and what kind of data do they need? Different types of decisions require different types of data. So I think the second point we always come to is around the data preparation. Data needs to be transposed, aggregated, or transformed in a way that gives us a meaningful set of derived variables. A lot of our discussion does focus on the data preparation. It's where they spend 70%-80% of their time.
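To make the idea of aggregating data into derived variables concrete, here is a small Python sketch that rolls transaction rows up to one row per customer. The transaction fields and the derived variable names (`order_count`, `total_spend`, `avg_order`) are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def derive_customer_features(transactions):
    """Aggregate transaction-level rows into one set of derived variables per customer."""
    amounts_by_customer = defaultdict(list)
    for row in transactions:
        amounts_by_customer[row["customer"]].append(row["amount"])

    return {
        customer: {
            "order_count": len(amounts),
            "total_spend": sum(amounts),
            "avg_order": sum(amounts) / len(amounts),
        }
        for customer, amounts in amounts_by_customer.items()
    }

# Made-up transaction data.
transactions = [
    {"customer": "A", "amount": 20},
    {"customer": "A", "amount": 40},
    {"customer": "B", "amount": 15},
]

features = derive_customer_features(transactions)
```

Which derived variables are worth building depends on the decision being supported, which is why that question comes first.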
