Improving Business Performance

This is the continuation of the transcript of DM Radio’s program titled “What You See Is What You ‘Get’ – How Data Visualization Conveys Insight.”

Dale Skeen: That’s what you really want to discover. You know, you can look at the bits and bytes going around the network. That tells you how well your network devices are performing. But it doesn't tell you how well your business is performing.

Exposing that gap is what operational intelligence is about. Being able to fill that gap typically requires visualizing the right information at the right time. And it has a lot to do with contextual information, which is not something we’ve talked a lot about so far.

Eric Kavanagh: Yeah. That's a good point. I guess the big question in my mind is how do you figure out first of all what to show and then how to show it?

Obviously it depends on the industry but maybe let's go with that electric company you were talking about before. I mean, yeah, we’re just talking about piecing together a dashboard and making sure to link various dependencies.

Business Performance Thresholds

In this case it would be certain thresholds that you don't want to go below or above. Obviously, in the summertime in Texas this would be heat waves that put a great strain on the grid, so you want to be able to ascertain when a critical moment is coming.

That’s sort of low-hanging fruit, I suppose. But in terms of other use cases or business value, what is some of the contextual data that you are able to bring in or help them find? Or what are they able to find on their own to complete the picture?

Dale Skeen: Well, the first contextual data is having a business transaction or process context. Again, remember that the business transactions are running over multiple systems, so that was not easy to do.

We looked at log files and the data left behind by these systems that they run. You can run process discovery tools against the raw data itself to see where all these transactions tend to flow. So that's one part of the information you need to bring in.
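As a rough illustration of what running process discovery against raw log data can look like, here is a minimal sketch in Python. The record layout (transaction ID, system name, timestamp) and the system names are assumptions made for the example, not something described in the program. It groups log records by transaction and orders them by time to recover the path each transaction took.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical log records left behind by several systems:
# (transaction_id, system, ISO timestamp). All values are illustrative.
LOG_RECORDS = [
    ("T1", "order_entry", "2024-07-01T09:00:00"),
    ("T2", "order_entry", "2024-07-01T09:01:00"),
    ("T1", "billing", "2024-07-01T09:04:00"),
    ("T2", "provisioning", "2024-07-01T09:05:00"),
    ("T1", "provisioning", "2024-07-01T09:10:00"),
]


def discover_flows(records):
    """Return, per transaction, the ordered tuple of systems it passed through."""
    by_txn = defaultdict(list)
    for txn_id, system, ts in records:
        by_txn[txn_id].append((datetime.fromisoformat(ts), system))
    # Sorting the (timestamp, system) pairs orders each transaction by time.
    return {
        txn: tuple(system for _, system in sorted(events))
        for txn, events in by_txn.items()
    }


def count_paths(flows):
    """Count how many transactions followed each distinct path."""
    return Counter(flows.values())


flows = discover_flows(LOG_RECORDS)
for path, count in count_paths(flows).items():
    print(" -> ".join(path), count)
```

Real process discovery tools do considerably more (for example, handling missing correlation IDs), so this only shows the basic idea of stitching a transaction together across systems.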

Performance Oriented Data

Next, you want to bring in performance-oriented data that is more at a business level. Once you have things in a business process context, you can measure, for example, how long a transaction stays in your first step.

So you bring the performance level information to that. Lay on top of that your business objectives. I have an SLA, for example, that it will take no more than 10 minutes of disruption to change a customer phone right into another. Or that a service order can be completed within three minutes or three days, depending on what type of service order that is.
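To make the idea of laying an SLA on top of measured performance concrete, here is a small Python sketch. The three-minute and three-day targets come from the discussion; the order type names (`automated`, `field_visit`) and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta

# SLA targets per service order type. The durations echo the discussion;
# the type names are invented for illustration.
SLA_TARGETS = {
    "automated": timedelta(minutes=3),
    "field_visit": timedelta(days=3),
}


def check_sla(order_type, started, completed):
    """Compare the elapsed time of one service order with its SLA target."""
    elapsed = completed - started
    target = SLA_TARGETS[order_type]
    return {"elapsed": elapsed, "target": target, "met": elapsed <= target}


# A hypothetical automated order that took four minutes misses its target.
result = check_sla(
    "automated",
    started=datetime(2024, 7, 1, 9, 0),
    completed=datetime(2024, 7, 1, 9, 4),
)
print(result["met"])
```

A dashboard layer could then color or flag each order by the `met` field rather than showing raw durations.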

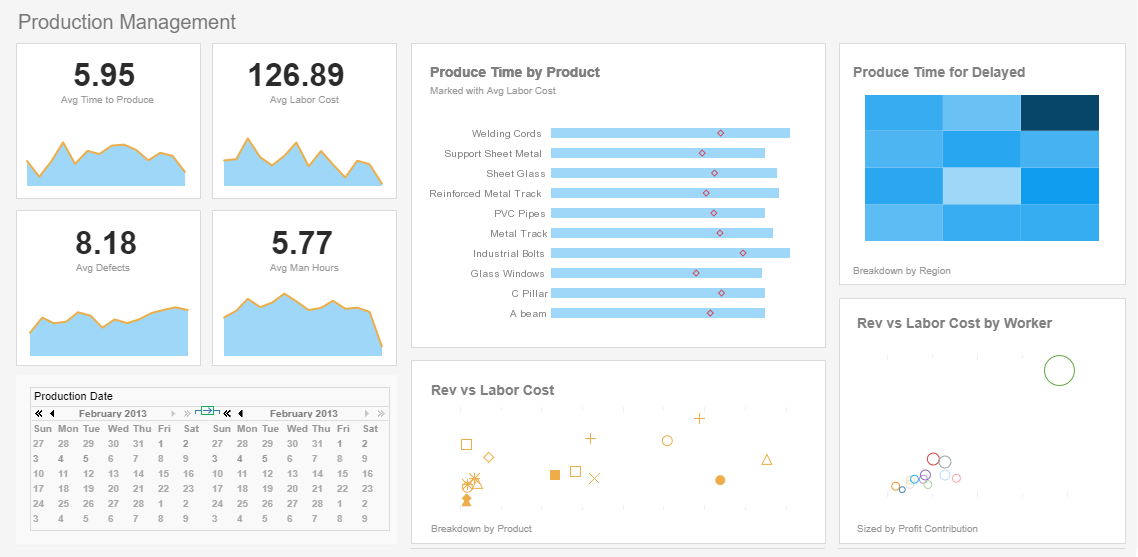

You lay these types of goal-oriented business objectives on top of that. What you have are these dashboards. They start to become rich and fully layered, and of course you have the different user roles that you mentioned. For some of them you may want to emphasize one layer or another.

One may just be looking at the performance objectives themselves. One may just be looking at outliers, where the process sort of went off the rails, if you will, and you can detect that as well.
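One simple way to detect such outliers, offered here only as a sketch, is to flag process durations that sit far from the median, measured in units of the median absolute deviation (MAD). This rule, the cutoff `k`, and the sample durations are assumptions for illustration; the program does not say which detection method is used.

```python
from statistics import median


def find_outliers(durations, k=5.0):
    """Flag durations more than k median absolute deviations from the median."""
    center = median(durations)
    mad = median([abs(d - center) for d in durations])
    if mad == 0:
        # All typical values are identical; this rule cannot rank deviations.
        return []
    return [d for d in durations if abs(d - center) / mad > k]


# Hypothetical process durations in minutes; one run went "off the rails".
durations = [12, 14, 13, 15, 11, 13, 95]
outliers = find_outliers(durations)
print(outliers)
```

The median-based rule is used instead of a mean and standard deviation because a single extreme run would otherwise inflate the very statistics used to detect it.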

Steps for a Successful Business Intelligence (BI) Trial

Implementing a Business Intelligence (BI) trial is a critical step for organizations looking to evaluate BI tools and their potential to drive data-driven decision-making. A well-executed trial helps assess whether a BI solution aligns with business objectives, integrates with existing systems, and meets user needs. Below is a step-by-step guide to conducting a successful BI trial, designed to ensure clarity, stakeholder engagement, and measurable outcomes.

1. Define Objectives and Success Criteria

Start by clearly defining the goals of the BI trial. Identify specific business challenges you aim to address, such as improving operational efficiency, enhancing customer insights, or optimizing marketing ROI. Engage stakeholders, including department heads and C-suite executives, to align the trial’s objectives with organizational goals. Establish Key Performance Indicators (KPIs) to measure success, such as time saved in report generation, user adoption rates, or accuracy of insights. For example, you might aim to reduce report creation time by 20% or achieve 80% user satisfaction. Document these objectives to guide the trial and ensure all participants understand the expected outcomes.
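Success criteria are easier to apply consistently when they are written down as explicit checks. The sketch below, using the 20% time reduction and 80% satisfaction examples from this step, shows one possible way to do that in Python. The class name, field names, and sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrialResult:
    """Measurements collected during a trial (illustrative fields)."""
    baseline_report_minutes: float
    trial_report_minutes: float
    satisfied_users: int
    surveyed_users: int


def evaluate(result, min_time_reduction=0.20, min_satisfaction=0.80):
    """Check measured results against the documented success criteria."""
    reduction = 1 - result.trial_report_minutes / result.baseline_report_minutes
    satisfaction = result.satisfied_users / result.surveyed_users
    return {
        "time_reduction": reduction,
        "satisfaction": satisfaction,
        "time_goal_met": reduction >= min_time_reduction,
        "satisfaction_goal_met": satisfaction >= min_satisfaction,
    }


# Hypothetical figures: reports drop from 60 to 45 minutes, 17 of 20 users satisfied.
outcome = evaluate(TrialResult(60, 45, 17, 20))
print(outcome)
```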

2. Assess Current Data Infrastructure

Evaluate your existing data environment to understand what data is available, how it’s stored, and any limitations, such as data silos or inconsistent formats. Identify internal data sources (e.g., CRM, ERP, or financial systems) and external sources (e.g., APIs or third-party providers) relevant to your objectives. Assess data quality and accessibility to determine if cleansing or integration is needed before the trial. This step ensures the BI tool can work with your data ecosystem and highlights any preparatory work required, such as setting up a data warehouse or ETL (Extract, Transform, Load) processes.
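A quick profile of a sample extract can surface missing values and inconsistent formats before the trial begins. The following Python sketch assumes a small set of rows with hypothetical field names and treats ISO dates (`YYYY-MM-DD`) as the expected format; both are assumptions for the example.

```python
from datetime import datetime

# Hypothetical sample rows exported from a CRM; field names are illustrative.
ROWS = [
    {"customer_id": "C1", "signup_date": "2024-01-15", "region": "West"},
    {"customer_id": "C2", "signup_date": "01/20/2024", "region": ""},
    {"customer_id": "", "signup_date": "2024-02-03", "region": "East"},
]


def is_iso_date(value):
    """Return True if the value parses as YYYY-MM-DD."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except ValueError:
        return False


def profile(rows, date_field):
    """Count empty values per field and list dates not in ISO format."""
    fields = rows[0].keys()
    missing = {f: sum(1 for r in rows if not r[f].strip()) for f in fields}
    non_iso = [
        r[date_field]
        for r in rows
        if r[date_field].strip() and not is_iso_date(r[date_field])
    ]
    return {"missing": missing, "non_iso_dates": non_iso}


report = profile(ROWS, "signup_date")
print(report)
```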

3. Select the Right BI Tool

Choose a BI tool that fits your organization’s needs based on functionality, scalability, ease of use, and integration capabilities. Popular options include Microsoft Power BI, Tableau, Qlik Sense, StyleBI, and Alteryx, many of which offer free trials or demo versions. Consider tools with features like interactive data visualization, AI-driven insights, and self-service analytics to empower business users. Ensure the tool integrates with your existing systems, such as Microsoft Excel or cloud platforms, and supports your data security and compliance requirements. Review customer case studies and trial offers to shortlist tools that align with your industry and company size.

4. Assemble a BI Trial Team

Form a diverse trial team that includes representatives from IT, analytics, and business units (e.g., sales, marketing, finance). Appoint a project lead, such as a Chief Data Officer or BI sponsor, to oversee the trial and ensure alignment with strategic goals. The team should include data analysts to handle technical setup, business users to test usability, and stakeholders to provide feedback. Define clear roles and responsibilities to streamline execution. If necessary, provide basic training to ensure team members can effectively use the BI tool during the trial.

5. Scope the Trial and Select Use Cases

Limit the trial’s scope to focus on specific use cases that align with your objectives. For example, you might test the tool’s ability to create real-time sales dashboards or analyze customer behavior trends. Select one or two departments, such as marketing or finance, to keep the trial manageable. Choose use cases with clearly defined metrics, such as tracking campaign performance or forecasting inventory needs. A focused scope allows you to demonstrate value quickly and avoid overwhelming users with a broad rollout.

6. Prepare Data for the Trial

Ensure your data is ready for analysis by performing data cleansing, transformation, and integration. Use ETL processes to combine data from multiple sources into a centralized repository, such as a data warehouse, for consistent and reliable results. Validate data accuracy to prevent misleading insights during the trial. If the BI tool supports automated data preparation, leverage these features to streamline the process. This step is critical to ensure the tool can generate meaningful visualizations and reports.
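The extract, transform, and load flow described above can be sketched in a few lines of Python. This example uses an in-memory SQLite database as a stand-in for a data warehouse, and the source rows, column names, and cleansing rules are all hypothetical.

```python
import sqlite3

# Hypothetical extracts from two source systems with inconsistent keys.
CRM_ROWS = [
    {"CustomerID": " c1 ", "Name": "Acme Corp"},
    {"CustomerID": "C2", "Name": "Globex"},
]
ERP_ROWS = [
    {"cust": "C1", "revenue": "1200.50"},
    {"cust": "C2", "revenue": "n/a"},
    {"cust": "C1", "revenue": "300"},
]


def transform_customers(rows):
    """Normalize customer IDs (trim, uppercase) and trim names."""
    return [(r["CustomerID"].strip().upper(), r["Name"].strip()) for r in rows]


def transform_revenue(rows):
    """Normalize IDs and keep only rows whose revenue is numeric."""
    cleaned = []
    for r in rows:
        try:
            cleaned.append((r["cust"].strip().upper(), float(r["revenue"])))
        except ValueError:
            continue  # skip rows such as "n/a"
    return cleaned


def load(conn, customers, revenue):
    """Load both cleaned data sets into the central repository."""
    conn.execute("CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE revenue (customer_id TEXT, amount REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
    conn.executemany("INSERT INTO revenue VALUES (?, ?)", revenue)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(conn, transform_customers(CRM_ROWS), transform_revenue(ERP_ROWS))
totals = conn.execute(
    "SELECT c.name, SUM(r.amount) FROM customers c "
    "JOIN revenue r ON r.customer_id = c.customer_id "
    "GROUP BY c.customer_id, c.name ORDER BY c.name"
).fetchall()
print(totals)
```

Silently skipping non-numeric rows is a design choice made for brevity here; in a real trial it is usually worth counting and reporting the rejected rows so that data quality problems stay visible.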

7. Set Up the BI Tool

Install and configure the BI tool according to your trial requirements. This may involve setting up cloud or on-premises deployment, connecting to data sources, and configuring user access permissions. Use the vendor’s trial resources, such as guided tutorials or support teams, to ensure proper setup. For example, many BI tools offer free trials with step-by-step guidance for connecting data sources and building dashboards. Test the tool’s integration with existing systems to confirm compatibility and performance.

8. Conduct the Trial and Build Dashboards

Roll out the BI tool to the trial team and begin testing the selected use cases. Encourage users to create interactive dashboards, reports, and visualizations using the tool’s drag-and-drop features or AI-driven analytics. For instance, users can build a sales performance dashboard to track KPIs like revenue growth or customer acquisition rates. Monitor user interactions to assess ease of use and functionality. Collect real-time feedback from participants to identify strengths, weaknesses, and any technical issues, such as slow query times or data access problems.

9. Monitor and Evaluate Performance

Track the trial’s progress against the defined KPIs and success criteria. Evaluate metrics such as report generation speed, user adoption, and the quality of insights generated. Conduct regular check-ins with the trial team to discuss challenges and gather qualitative feedback. For example, ask business users if the tool’s visualizations helped them uncover actionable insights. Use the trial data to assess whether the BI tool meets your objectives and integrates seamlessly with your workflows. Document any issues, such as data inaccuracies or usability barriers, for further refinement.

10. Gather Feedback and Plan Next Steps

At the trial’s conclusion, collect comprehensive feedback from all participants through surveys, interviews, or focus groups. Assess whether the tool delivered the expected benefits, such as improved decision-making or reduced reporting time. Review feedback to identify areas for improvement, such as additional training needs or data integration enhancements. Based on the trial’s outcomes, decide whether to adopt the BI tool, explore other options, or adjust the scope for a larger rollout. Develop a roadmap for full implementation, including timelines, resource requirements, and scalability plans.