Keep an Eye on the Future with Forecasting Tools and Reports

In today's business environment, uncertainty has become the norm. The best course of action cannot be found in historical reports alone, and simply reacting to past and current conditions is not enough to run a business. Organizations need forecasting tools and reports that offer insight into potential future outcomes based on their existing operations.

Meet all of Your Organization's BI Needs With a Single Solution

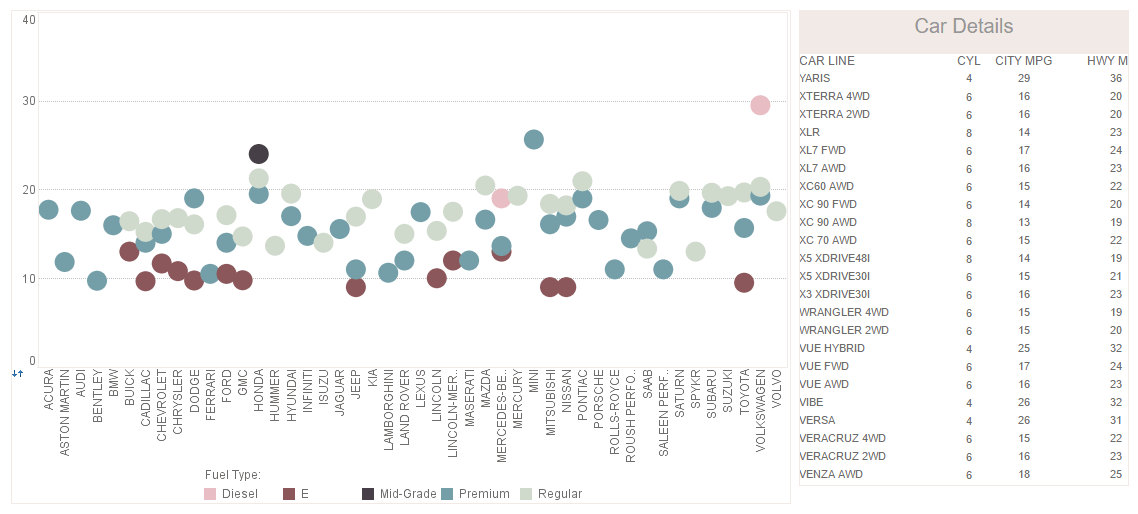

With InetSoft, you won't need budgetary approval to invest in a separate forecasting solution. StyleBI is a complete BI solution that includes advanced forecasting tools along with sophisticated reporting and dashboarding technology.

Self-Service | Designed for Business Users

Put forecasting tools and reports in the hands of every employee.

Analytics software is often designed for specialists. StyleBI has a short learning curve, so just about anyone can learn and use it. Making analytics pervasive in your organization means more employees can anticipate conditions and perform analyses from their own unique perspectives.

Robust | Sophisticated Data Mashup

Combine data from many disparate sources for a complete picture of possible future outcomes.

StyleBI is a web-based BI solution that can mash up data from many different sources simultaneously, including data marts, data warehouses, Google AdWords and Analytics, relational databases, salesforce.com, and many more. There is no need to waste time and resources creating separate reports for each data source: StyleBI integrates them all in one place, accessible anywhere from desktop browsers to phones and tablets.

How a Feldspar Miner Switched from Adobe Analytics to InetSoft’s Serverless Analytics Microservice

Feldspar mining is not a digital-native business. Production supervisors track blast-to-mill cycles, maintenance leads watch vibration signatures on crushers, and logistics planners obsess over haul-truck queue times at the pit and delays at the railhead. Yet the company website and marketing funnels still accounted for the lion’s share of analytics spend, until an operations-led initiative forced a rethink. This article details how a mid-sized feldspar producer moved from Adobe Analytics to InetSoft’s serverless analytics microservice, why that architecture fit mining realities better than a web-analytics suite, and the savings the team realized in licensing, resources, overhead, and support, along with measurable gains in management and end-user satisfaction.

Background: Great at Web Funnels, Misaligned for the Mine

The miner originally adopted Adobe Analytics to instrument corporate sites, dealer portals, and a handful of customer apps. Over time, operations tried to extend that footprint: pulling in plant historian exports, GPS logs from haul trucks, and ERP order cycle times. The disconnect was architectural as much as functional. Adobe’s model excels at event streams from web and mobile touchpoints; the mine needed to mash up SCADA tags, IoT telemetry, CMMS work orders, and rail logistics—and deliver role-based dashboards to crews with spotty connectivity.

The governance pattern was also awkward for the shop floor. Operations analysts wanted embedded dashboards inside existing internal apps, per-crew views on tablets, and fine-grained row-level security tied to shift and site. Building these experiences around a marketing analytics core created friction, duplicated extracts, and a growing backlog for the central web analytics team.

Why InetSoft’s Serverless Analytics Microservice

The pivot point came when the IT architecture group evaluated a containerized, serverless-style analytics microservice from InetSoft. The premise was straightforward: package dashboards, data mashups, and report rendering into stateless services that auto-scale, expose clean APIs, and embed anywhere. For mining, that translated to:

- Data mashup without heavy ETL: Combine historian CSV drops, MQTT IoT feeds, ERP tables, and dispatch logs into a unified semantic layer without committing to a monolithic data warehouse upfront.

- Elastic, event-driven runtime: Analytics workloads spiked at shift changes and end-of-day settlements; the microservice scaled out on demand and idled gracefully during the overnight graveyard-shift lull.

- Edge-friendly deployment: Lightweight containers could run at the plant or in the cloud, synchronizing securely and tolerating intermittent connectivity.

- Embed-first delivery: Dashboards and pixel-perfect reports embedded into the company’s maintenance portal and dealer extranet via web components and single sign-on.

Architecture in Practice

The final topology used a Kubernetes cluster in the company’s existing cloud VPC with a small on-prem node at the processing plant. The InetSoft analytics microservice ran as autoscaled pods behind an API gateway. Data ingress followed a pragmatic ELT pattern:

- Streaming: MQTT bridges forwarded crusher temperature and vibration metrics; GPS pings for haul trucks arrived as compact JSON batches.

- Batch: Nightly dumps from the historian (OPC-UA derived) landed in object storage; ERP (orders, shipments, inventory) replicated via CDC into a cloud database.

- Mashup layer: InetSoft’s pipeline joined these sources with no heavy transformation beyond key normalization, asset-ID deduplication, and consistent timestamps.

- Security: SSO with role-based access mapped to HR groups; row-level filters enforced “site-only” views for supervisors.
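The key normalization, deduplication, and timestamp alignment in the mashup layer can be sketched in plain Python. This is not InetSoft’s pipeline or API; it is a minimal illustration that assumes hypothetical record shapes (dicts with `asset` and `ts` fields, ISO-8601 timestamps that carry a UTC offset) and a hypothetical canonical asset-ID format that strips separators and uppercases.

```python
import re
from datetime import datetime, timezone


def normalize_asset_id(raw: str) -> str:
    """Canonicalize an asset ID: drop whitespace, dashes, and underscores; uppercase.

    Hypothetical convention: "cr-01", "CR_01", and " CR 01 " all become "CR01".
    """
    return re.sub(r"[\s\-_]", "", raw).upper()


def to_utc(ts: str) -> datetime:
    """Parse an ISO-8601 timestamp that includes a UTC offset and convert it to UTC."""
    dt = datetime.fromisoformat(ts)
    if dt.tzinfo is None:
        raise ValueError(f"timestamp without offset: {ts!r}")
    return dt.astimezone(timezone.utc)


def normalize(records):
    """Normalize keys and timestamps, then drop duplicate (asset, instant) readings."""
    seen = set()
    out = []
    for r in records:
        key = (normalize_asset_id(r["asset"]), to_utc(r["ts"]))
        if key in seen:
            continue  # same asset at the same instant, e.g. reported by two sources
        seen.add(key)
        rest = {k: v for k, v in r.items() if k not in ("asset", "ts")}
        out.append({"asset": key[0], "ts": key[1], **rest})
    return out


def mashup(readings, work_orders):
    """Attach open work-order IDs to each normalized reading via the asset ID."""
    by_asset = {}
    for wo in work_orders:
        by_asset.setdefault(normalize_asset_id(wo["asset"]), []).append(wo["id"])
    return [{**r, "work_orders": by_asset.get(r["asset"], [])} for r in normalize(readings)]
```

Two readings such as `cr-01` at `06:00+00:00` and `CR_01` at `01:00-05:00` describe the same asset at the same instant, so only one survives. Rejecting timestamps without an offset is a deliberate choice: silently assuming local time is a common source of misaligned joins.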

Presentation used three patterns: real-time tiles for pit operations (cycle time, queue length, payload variance), daily scorecards for plant OEE, and executive rollups for cost-per-ton, on-time shipment, and maintenance backlog.
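The real-time pit tiles can be illustrated with a small calculation over haul-truck events. The event schema here (`truck`, `type` of `"arrive"` or `"load"`, `t_min` in minutes, `payload_t` in tonnes) is a hypothetical stand-in for the GPS and dispatch feeds, and cycle time is approximated as the gap between consecutive loads of the same truck; a real dispatch system may define these metrics differently.

```python
from collections import defaultdict
from statistics import mean, pvariance


def cycle_times(events):
    """Cycle time per truck: minutes between consecutive 'load' events."""
    loads = defaultdict(list)
    for e in events:
        if e["type"] == "load":
            loads[e["truck"]].append(e["t_min"])
    cycles = []
    for times in loads.values():
        times.sort()
        cycles.extend(b - a for a, b in zip(times, times[1:]))
    return cycles


def queue_length(events, now_min):
    """Trucks whose latest event at or before now_min is an arrival (waiting to load)."""
    latest = {}
    for e in sorted(events, key=lambda e: e["t_min"]):
        if e["t_min"] <= now_min:
            latest[e["truck"]] = e["type"]
    return sum(1 for kind in latest.values() if kind == "arrive")


def pit_tile(events, now_min):
    """Values for a pit-operations tile; None where there is too little data."""
    cycles = cycle_times(events)
    payloads = [e["payload_t"] for e in events if e["type"] == "load"]
    return {
        "avg_cycle_min": mean(cycles) if cycles else None,
        "queue_length": queue_length(events, now_min),
        "payload_variance_t2": pvariance(payloads) if len(payloads) > 1 else None,
    }
```

Recomputing the tile every 60 seconds over a rolling window of events is enough for a near-real-time display; the window size is a tuning choice the source does not specify.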

Licensing and Cost Realignment

Cost modeling was the first surprise. Adobe Analytics was licensed on an enterprise contract anchored to digital properties and monthly hit volumes. The operations data didn’t fit cleanly into that model, and adding users for non-marketing teams increased the footprint. By contrast, InetSoft’s serverless microservice aligned with IT’s container and API paradigm.

- Licensing: The team moved from a multi-year web analytics agreement to a subscription for the microservice plus a modest charge for additional author seats. Annual outlay fell by an estimated 58–65% because they no longer paid for marketing-oriented features they weren’t using in the mine.

- Compute: Autoscaling kept idle capacity near zero during off-peak hours. Compared to the previous fixed workloads (VMs and external processing for extracts), average monthly compute and storage spend dropped ~42%.

- Embedding: No extra per-embed fees or per-view charges; crews accessed dashboards as part of the existing maintenance and dispatch apps, simplifying budgeting and adoption.

Resource and Overhead Savings

Before the switch, the analytics backlog was split across the web team and a small group of operations analysts who wrangled CSV exports and one-off scripts. InetSoft’s microservice allowed IT to standardize a few patterns and eliminate toil:

- Authoring efficiency: Reusable templates for KPIs (OEE, MTBF/MTTR, queue time, cost-per-ton) reduced build time for new dashboards by ~55%.

- Data pipeline simplicity: Visual mashups replaced brittle nightly ETL jobs. The count of cron-based data movers fell from 27 to 9, cutting failure points by two-thirds.

- Ops overhead: Because services were stateless and versioned as containers, upgrades became routine rolling deployments. Planned downtime for analytics updates dropped from hours to minutes.
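The reusable KPI templates rest on standard formulas: OEE is availability times performance times quality, MTBF is operating time divided by failures, MTTR is repair time divided by repairs, and cost-per-ton is total cost divided by tons produced. The following is a minimal sketch; the function names and the tonnage-based OEE inputs are assumptions, not the team’s actual templates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShiftData:
    planned_min: float     # planned production time for the shift, minutes
    downtime_min: float    # unplanned stops, minutes
    ideal_rate_tph: float  # ideal throughput, tons per hour
    total_tons: float      # tons processed
    good_tons: float       # tons meeting spec


def oee(s: ShiftData) -> float:
    """Overall equipment effectiveness = availability * performance * quality."""
    run_min = s.planned_min - s.downtime_min
    availability = run_min / s.planned_min
    performance = s.total_tons / (s.ideal_rate_tph * run_min / 60)
    quality = s.good_tons / s.total_tons
    return availability * performance * quality


def _ratio(numerator: float, denominator: float, name: str) -> float:
    """Shared guard so every KPI fails loudly on an empty period."""
    if denominator <= 0:
        raise ValueError(f"{name}: denominator must be positive")
    return numerator / denominator


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures, in hours."""
    return _ratio(operating_hours, failures, "MTBF")


def mttr(repair_hours: float, repairs: int) -> float:
    """Mean time to repair, in hours."""
    return _ratio(repair_hours, repairs, "MTTR")


def cost_per_ton(total_cost: float, tons: float) -> float:
    """Total cost divided by tons produced."""
    return _ratio(total_cost, tons, "cost-per-ton")
```

With illustrative inputs of a 480-minute shift, 48 minutes of downtime, an ideal rate of 100 t/h, 648 t processed, and 583.2 t in spec, each OEE factor is 0.9 and OEE comes out at about 0.729.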

Support Burden and Reliability

Support tickets used to cluster around three issues: stale data after failed extracts, access problems for contractors, and report rendering timeouts. Post-migration metrics over the first two quarters showed:

- Support tickets: A 38% reduction in analytics-related tickets, with the biggest improvement in “data didn’t refresh” incidents.

- Performance: P95 dashboard load times fell from 6.8 seconds to 3.1 seconds for executive views; near-real-time pit ops tiles updated every 60 seconds without manual refresh.

- Access control: SSO groups synchronized hourly; contractors got time-boxed access linked to their work orders, reducing manual account cleanup.
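P95 here means the load time that 95% of dashboard renders met or beat. The source does not say how the figure was computed; a minimal sketch using the nearest-rank method follows, with the caveat that many monitoring tools interpolate between samples or use histogram buckets, so their P95 can differ slightly from this one.

```python
import math


def percentile_nearest_rank(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank; multiply first to limit float error
    return ordered[rank - 1]
```

Because the result is always an observed sample, nearest-rank P95 is easy to explain to non-specialists: "95% of renders were at or below this measured time."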

End-User and Management Satisfaction

The most telling indicator wasn’t technical—it was behavioral. Supervisors began opening their shift dashboards before safety briefings; the maintenance planner printed a daily exception list for assets with rising vibration and suboptimal lube temperatures; executives stopped asking for emailed spreadsheets. An internal pulse survey (n≈140 across operations, maintenance, logistics, and sales ops) reported:

- Overall satisfaction: 4.4/5, up from 3.1/5.

- Perceived usefulness: 4.6/5 for operations roles; 4.2/5 for logistics and customer service.

- Time to insight: 62% of respondents said they could answer “most” daily questions without requesting a new report.

Management’s endorsement followed the numbers. Cost-per-ton is the company’s north-star metric, and correlating payload variance, queueing, and idle time surfaced a dispatch tweak that shaved 1.7 minutes from the average pit cycle time. While many factors move cost-per-ton, leadership attributed part of a 3–4% improvement in the quarter to better visibility and faster course corrections.

What Changed for IT

From IT’s perspective, two shifts mattered most. First, analytics moved from a monolithic, marketing-centric platform to a modular service stack that looked like everything else in their cloud: containers, declarative infra, observability baked in. Second, ownership of domain logic migrated to the teams closest to the work. The data mashup layer and semantic definitions lived alongside crew playbooks; IT focused on platform reliability, security, and enabling patterns instead of building one-off reports.

Observability improved as well. The microservice exported metrics into the company’s standard monitoring setup. Anomalous spikes in query latency triggered autoscale rules or flagged a bad upstream dataset. The feedback loop between SRE and analysts tightened, and “performance” stopped being a mysterious dashboard property and became a graph IT could reason about.
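The source does not name the autoscaler. Assuming the pods were scaled by the Kubernetes Horizontal Pod Autoscaler, its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no action taken when the ratio is within a tolerance (0.1 by default). The sketch below re-implements only that rule for a hypothetical per-pod metric such as concurrent queries; the min/max defaults are illustrative, and real HPA behavior also includes stabilization windows, readiness handling, and scaling policies that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica count, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, at a shift change with four pods averaging 9 concurrent queries each against a target of 5, the rule asks for eight pods; overnight, a drop to 1 query per pod scales back toward the floor.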

Migrating Without a Rewrite

Success hinged on a pragmatic migration path. Rather than a big-bang cutover, the team followed three waves over 90 days:

- Wave 1 — Executive and Finance: Recreated top-level KPIs (cost-per-ton, throughput, on-time shipment, inventory turns) using the new semantic layer. Early wins secured sponsorship.

- Wave 2 — Plant and Maintenance: Built OEE tiles and condition-based maintenance views; embedded dashboards inside the CMMS, so techs didn’t switch apps.

- Wave 3 — Logistics and Customer Experience: Linked rail schedules, demurrage fees, and customer order windows; gave dealers a self-service portal for order status and ETAs.

Critically, historical trend lines were preserved by backfilling summaries into the new store, so users didn’t lose context. At the same time, the team retired brittle extracts and replaced them with streaming or CDC sources as they came up for maintenance.
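Backfilling summaries can be sketched as an idempotent rollup: group raw readings by asset and day, compute a few aggregates, and upsert them keyed by (asset, day) so that re-running the backfill overwrites rather than duplicates. The tuple shape, the chosen aggregates, and the in-memory dict standing in for the new store are all assumptions for illustration; timestamps are assumed to be already normalized to UTC.

```python
from collections import defaultdict
from datetime import date, datetime
from statistics import mean
from typing import Dict, Iterable, Tuple

Key = Tuple[str, date]


def daily_summaries(readings: Iterable[Tuple[str, datetime, float]]) -> Dict[Key, dict]:
    """Roll (asset, UTC timestamp, value) readings up to one row per asset per day."""
    buckets = defaultdict(list)
    for asset, ts, value in readings:
        buckets[(asset, ts.date())].append(value)
    return {
        key: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for key, vals in buckets.items()
    }


def backfill(store: Dict[Key, dict], readings) -> Dict[Key, dict]:
    """Upsert summaries keyed by (asset, day); re-running over the same history is safe."""
    store.update(daily_summaries(readings))
    return store
```

One design caveat: because each run overwrites whole (asset, day) rows, every batch should contain complete days; splitting a day across two runs would leave only the second half in the store.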

Security and Governance Fit for Industrial Data

Mining data isn’t just sensitive—it’s context-heavy. The InetSoft microservice’s row-level policies ensured that supervisors saw only their pit or plant. For contractors, time-boxed roles granted access to asset views relevant to work orders. Audit logs captured every dashboard render and filter state, satisfying internal controls without rote screenshots. Governance meetings shifted from “who can see what” to “is the semantic definition still right for how we run the plant.”
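The row-level and time-boxed rules can be expressed as a default-deny filter. This is a minimal sketch, not InetSoft’s policy engine: the role names, the `User` fields, and the row shape are hypothetical. Supervisors see rows for their assigned sites; contractors see rows only for assets on their work orders, and only until their access expires.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class User:
    name: str
    role: str                                  # hypothetical: "supervisor" or "contractor"
    sites: FrozenSet[str] = frozenset()        # supervisor scope
    assets: FrozenSet[str] = frozenset()       # contractor scope, from work orders
    access_expires: Optional[datetime] = None  # contractor expiry, timezone-aware UTC


def visible_rows(user: User, rows: List[dict], now: Optional[datetime] = None) -> List[dict]:
    """Return only the rows this user may see; unknown roles see nothing."""
    now = now or datetime.now(timezone.utc)
    if user.role == "supervisor":
        return [r for r in rows if r["site"] in user.sites]
    if user.role == "contractor":
        if user.access_expires is None or now >= user.access_expires:
            return []  # expired or never granted
        return [r for r in rows if r["asset"] in user.assets]
    return []
```

Defaulting to an empty result for unrecognized roles means a misconfigured group mapping hides data rather than exposing it.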

Quantifying the Business Case

After two quarters, the IT finance and operations excellence teams summarized impacts (all figures annualized estimates):

- Licensing: 62% reduction versus prior analytics spend by aligning licensing to actual user patterns and dropping unused web-marketing features.

- Compute & storage: 48% savings through autoscaling and object-storage tiering of historical summaries.

- Support & overhead: 35–40% fewer analytics tickets; upgrade windows shrank from scheduled downtime to rolling updates.

- Time-to-dashboard: Median lead time for a new analytics view fell from 4–6 weeks to 5–7 days using reusable templates and the mashup layer.

- Operational impact: 3–4% cost-per-ton improvement partly attributed to better visibility and quicker countermeasures; maintenance backlog reduced 11% with early exception detection.

What the Team Would Do Again

The miner’s post-mortem emphasized three practices worth repeating:

- Start with embed: Put analytics where the work happens. Embedding inside CMMS and dispatch apps drove adoption without training marathons.

- Treat the semantic layer as code: Version control KPI definitions; review changes like application code so “cost-per-ton” means the same thing everywhere.

- Bias toward streaming and CDC: Replace nightly extracts opportunistically; it lowers staleness and simplifies failure recovery.
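Treating the semantic layer as code can be as simple as keeping KPI definitions in a version-controlled file and failing a review check when a definition changes without approval. The definition format and the fingerprint-based check below are hypothetical illustrations, not an InetSoft feature; the point is that a canonical serialization makes any edit to "cost-per-ton" visible in review.

```python
import hashlib
import json

# Hypothetical KPI definitions kept in version control alongside the dashboards.
KPI_DEFINITIONS = {
    "cost_per_ton": {
        "numerator": "sum(total_cost_usd)",
        "denominator": "sum(tons_produced)",
        "grain": ["site", "month"],
    },
    "mttr_hours": {
        "numerator": "sum(repair_hours)",
        "denominator": "count(repairs)",
        "grain": ["asset", "month"],
    },
}


def fingerprint(definition: dict) -> str:
    """Stable hash of a definition: key order and whitespace do not matter."""
    canonical = json.dumps(definition, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def unapproved_changes(definitions: dict, approved: dict) -> list:
    """Names of KPIs whose current definition differs from the approved fingerprint."""
    return sorted(name for name, d in definitions.items()
                  if approved.get(name) != fingerprint(d))
```

In a CI job, the approved fingerprints would live in a reviewed file, and the build would fail whenever `unapproved_changes` returns a non-empty list.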