Data Grid Cache Map-Reduce for Business Intelligence

Data Grid Cache

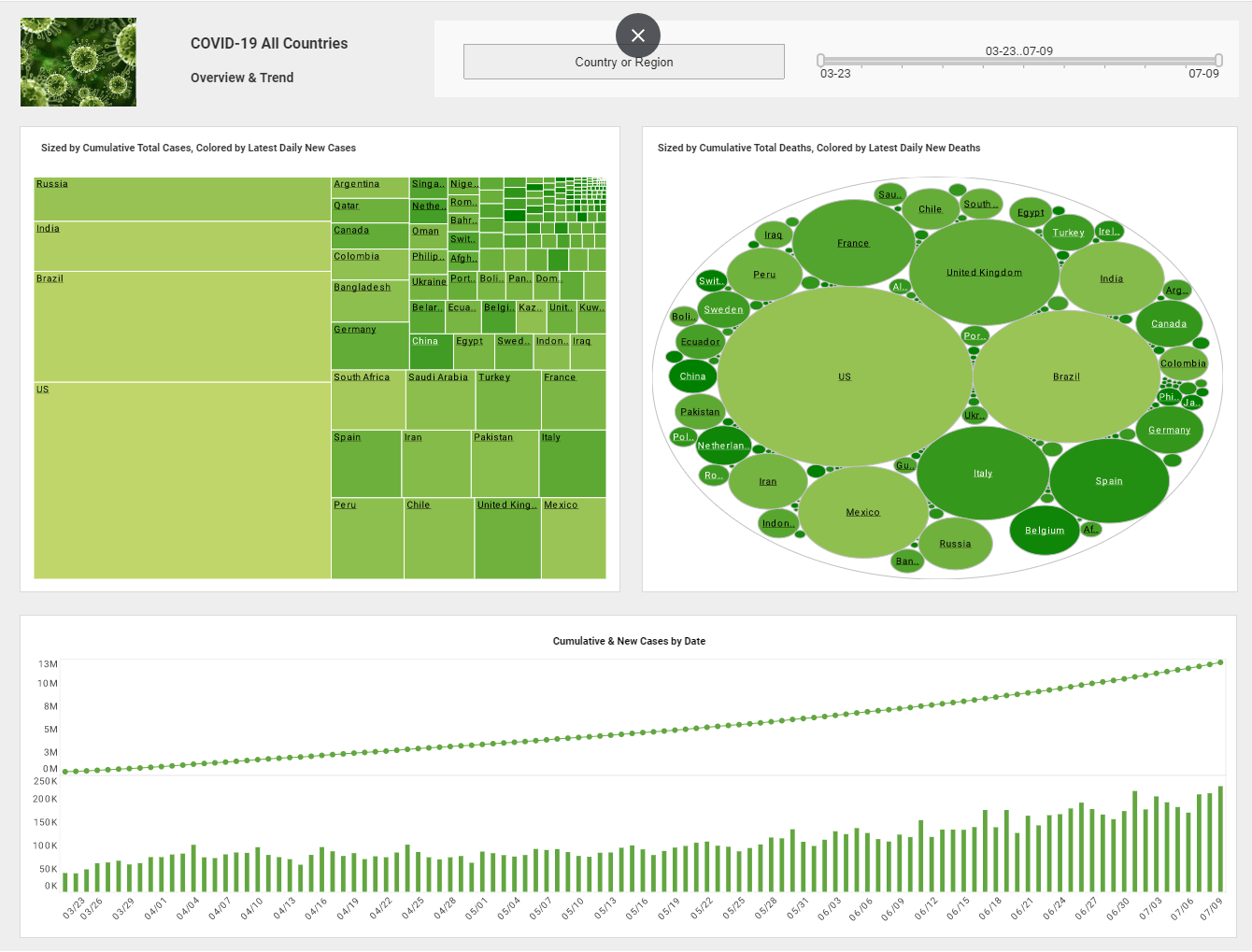

Mark: So this is the label we’re giving to the technology that we’re introducing in this release. It delivers performance improvements and establishes a path for scaling up to access massive databases while serving a large number of simultaneous users. Cassie’s going to add a little more description to this for you.

Cassie: Thanks, Mark. Traditionally, our software has always defaulted to accessing your operational data sources directly. We never really espoused the idea of traditional BI, where you’re required to build a data warehouse. The only problem with direct access, and it only comes up some of the time, is that operational data sources tend to be tuned more for data entry and writing.

Take, for instance, a CRM system, where information is constantly being added. Systems like that are not as performance-oriented for reporting, that is, for reading the data, especially for interactive analysis and visualization.

Some of our customers have built reporting databases where they’re simply replicating the data over. Some even go so far as building a data warehouse, although our software doesn’t require that. So, we introduced the concept of materialized views a few versions ago and with this latest release we’ve really reengineered and re-architected it all so it’s much higher performance.

The core of it is based on techniques from column-based databases, or data stores, that are much more efficient for interactive analysis of large amounts of data. And if you have a really large volume of data, we’ve also built in distributed technology that’s largely based on the map-reduce concept, where we can parallelize a lot of calculations on extremely large data sets, making use of a cluster or private cloud of commodity hardware instead of forcing you to invest in a very large, high-performing machine.

So with those two technologies, the new data grid cache feature really extends this embedded materialized view concept even further and turns the whole process of data warehouse development on its head. You first build out the reports in viewsheets, and then the contents of the viewsheet are analyzed to see how you’re using the data. From that, we can now build an appropriate column-based data store that will make that particular dashboard very high performing.
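As a rough illustration of the column-based idea Cassie describes, the following Python sketch pivots row-oriented records into one list per column and then aggregates by reading only the two columns a report needs. The sample rows and the names `to_columns` and `sum_by` are hypothetical and are not taken from the product; the sketch also assumes every row carries the same fields.

```python
# Hypothetical sample data: row-oriented records, as an operational system might store them.
rows = [
    {"region": "East", "product": "A", "sales": 100.0},
    {"region": "West", "product": "B", "sales": 250.0},
    {"region": "East", "product": "C", "sales": 75.0},
]

def to_columns(rows):
    """Pivot a list of row dicts into one list per column (assumes uniform fields)."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns

def sum_by(columns, key_col, value_col):
    """Total value_col per distinct key_col value, touching only those two columns."""
    totals = {}
    for key, value in zip(columns[key_col], columns[value_col]):
        totals[key] = totals.get(key, 0.0) + value
    return totals

columns = to_columns(rows)
totals = sum_by(columns, "region", "sales")
print(totals)
```

The aggregation never reads the `product` column, which is the main reason column-oriented layouts tend to suit interactive analysis better than row-oriented ones.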

Explaining MapReduce

MapReduce is a powerful programming model and processing technique that enables large-scale data processing across distributed systems. Originally developed by Google, it has become a cornerstone of big data management, particularly in environments where vast amounts of data must be processed efficiently. In the realm of data management, MapReduce offers a robust framework for processing and generating large datasets by leveraging parallel computing. This article delves into the mechanics of MapReduce, its role in data management, and how it has transformed the way organizations handle and analyze big data.

Understanding MapReduce

MapReduce is fundamentally based on two key functions: Map and Reduce. These functions work together to process large datasets in a distributed manner. The framework splits the input into smaller pieces and applies the Map function to each piece independently, while the Reduce function aggregates the intermediate results to produce a final output.

- Map Function:

- The Map function takes an input key-value pair and produces a set of intermediate key-value pairs. This step is often referred to as the mapping phase. The input data is typically divided into chunks, and each chunk is processed independently. The Map function is applied to each piece of data in parallel, making it highly scalable. For example, if the task is to count the frequency of words in a large collection of documents, the Map function would process each document, emit each word as a key, and associate it with the value 1.

- Shuffle and Sort:

- After the Map function has generated intermediate key-value pairs, the system automatically performs a shuffle and sort operation. This process groups all intermediate values associated with the same key together. In the word count example, all instances of the word "data" from different documents would be grouped together. This step is critical as it organizes the data in a way that allows the Reduce function to efficiently aggregate results.

- Reduce Function:

- The Reduce function takes the grouped intermediate key-value pairs produced by the shuffle and sort operation and processes them to generate the final output. The Reduce function iterates over each key and performs the necessary computation to produce a summarized result. In the word count example, the Reduce function would sum up all the values associated with each word, producing a final count for each word across all documents.
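The three phases above can be sketched in a few lines of single-process Python using the word count example. This is a minimal illustration, not a distributed implementation: the function names (`map_words`, `shuffle`, `reduce_counts`, `word_count`) and the sample documents are assumptions made for this sketch, and a real framework would run the map and reduce calls on many machines.

```python
from collections import defaultdict

def map_words(doc_id, text):
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in text.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle and sort: group all values by key and return keys in sorted order."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reduce_counts(word, counts):
    """Reduce: sum the values collected for one word."""
    return word, sum(counts)

def word_count(documents):
    """Run map, shuffle, and reduce in sequence over a dict of documents."""
    intermediate = []
    for doc_id, text in documents.items():
        intermediate.extend(map_words(doc_id, text))
    return dict(reduce_counts(word, counts) for word, counts in shuffle(intermediate))

# Hypothetical input documents.
docs = {"d1": "big data needs big tools", "d2": "data tools"}
counts = word_count(docs)
print(counts)
```

Because each `map_words` call depends only on its own document, and each `reduce_counts` call only on its own key, both phases can be spread across machines without coordination beyond the shuffle.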

MapReduce in the Context of Data Management

In the realm of data management, MapReduce is particularly valuable for handling massive datasets that are too large to be processed on a single machine. By distributing the processing workload across a cluster of machines, MapReduce allows organizations to efficiently manage and analyze big data. Several key aspects of data management are significantly enhanced by MapReduce:

- Scalability:

- One of the primary advantages of MapReduce is its scalability. As data volumes grow, the ability to process data in parallel across multiple machines becomes crucial. MapReduce scales horizontally, meaning that adding more machines to a cluster can increase processing power and reduce the time required to process large datasets. This scalability is vital for organizations dealing with rapidly expanding data, such as social media platforms, e-commerce sites, and financial institutions.

- Fault Tolerance:

- In a distributed computing environment, hardware failures are inevitable. MapReduce is designed with fault tolerance in mind. If a machine in the cluster fails during the execution of a MapReduce job, the system automatically reassigns the task to another machine. This redundancy ensures that the job can be completed even in the face of hardware failures, making MapReduce a reliable choice for critical data processing tasks.

- Efficiency in Data Processing:

- MapReduce is optimized for processing large datasets by breaking down complex tasks into smaller, parallelizable components. Where possible, map tasks are scheduled on the machines that already hold the input data, which minimizes data transfer across the network, often a significant bottleneck in distributed systems. Because jobs run as batches rather than as continuous streams, this efficiency matters most for organizations that need to work through large volumes of data within a fixed processing window.

- Flexibility:

- The MapReduce programming model is flexible and can be applied to a wide range of data processing tasks. Whether the goal is to perform large-scale data aggregation, filter data based on specific criteria, or analyze patterns across multiple datasets, MapReduce can be adapted to meet the needs of various use cases. This flexibility has made it a popular choice for organizations that need a versatile tool for managing diverse data processing workloads.

- Integration with Big Data Ecosystems:

- MapReduce is often integrated with other tools and frameworks within big data ecosystems. For example, Apache Hadoop, an open-source framework for distributed storage and processing of large datasets, was built around MapReduce as its original processing engine. Hadoop's ecosystem includes HDFS (Hadoop Distributed File System) for data storage, along with tools such as Hive for data warehousing and Pig for data analysis, which can compile their queries and scripts into MapReduce jobs. This integration makes MapReduce an essential component of many big data architectures.
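Three of the properties listed above (parallel execution, re-running failed tasks, and limiting data transfer) can be sketched together in Python. This is a simplified, single-machine illustration: a thread pool stands in for the machines of a cluster, the failure is simulated, and every name here (`map_with_combiner`, `run_task`, `run_job`, `flaky_task`) is hypothetical rather than part of Hadoop or any other framework.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def map_with_combiner(chunk):
    """Map one chunk and combine locally, so only one partial count per word
    leaves the worker instead of one pair per occurrence."""
    return Counter(word for line in chunk for word in line.split())

def run_task(task, chunk, max_attempts=3):
    """Re-run a failed task, mimicking a scheduler that reassigns work."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task(chunk)
        except RuntimeError:
            if attempt == max_attempts:
                raise

def run_job(chunks, task=map_with_combiner, workers=4):
    """Process chunks concurrently, then merge the partial counts (the reduce step)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda chunk: run_task(task, chunk), chunks))
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds counts together
    return total

# Simulated failure: each chunk fails on its first attempt and succeeds on retry.
failed_once = set()

def flaky_task(chunk):
    key = tuple(chunk)
    if key not in failed_once:
        failed_once.add(key)
        raise RuntimeError("simulated worker failure")
    return map_with_combiner(chunk)

chunks = [["a b a"], ["b c"], ["a"]]
result = run_job(chunks, task=flaky_task)
print(result)
```

Adding workers corresponds to horizontal scaling, and the retry loop only works because a map task has no side effects, so running it twice gives the same result as running it once.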

Real-World Applications of MapReduce

MapReduce has been widely adopted across various industries for its ability to process large datasets efficiently. Here are a few real-world applications where MapReduce has made a significant impact:

- Search Engines:

- Google, the original developer of MapReduce, used it to index the vast amounts of data on the web. The MapReduce framework allowed Google to efficiently crawl, index, and rank billions of web pages, enabling fast and accurate search results. The ability to process and analyze massive datasets in parallel was a key factor in Google's ability to scale its search engine to handle global traffic.

- Data Warehousing:

- Large enterprises often use MapReduce for data warehousing tasks, such as ETL (Extract, Transform, Load) processes. MapReduce can process and transform raw data from various sources into structured formats that are suitable for analysis and reporting. This capability is crucial for businesses that need to integrate data from multiple systems and generate insights for decision-making.

- Social Media Analytics:

- Social media platforms generate vast amounts of user-generated content daily. MapReduce is used to analyze this data, identifying trends, sentiment, and user behavior. For example, Facebook has used MapReduce to process and analyze petabytes of user interaction data to improve its recommendation algorithms and target advertising.

- Financial Services:

- In the financial industry, MapReduce is used to analyze large datasets related to transactions, market trends, and customer behavior. This analysis helps financial institutions detect fraudulent activity, assess credit risk, and optimize trading strategies. The ability to process data in parallel allows financial institutions to handle the high volumes of data generated in today's digital economy.

- Genomic Research:

- In the field of genomics, MapReduce has been applied to process and analyze vast amounts of genetic data. Researchers use MapReduce to align and process DNA sequencing reads, compare genetic variations, and identify patterns that can lead to breakthroughs in understanding diseases and developing new treatments.
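Several of these applications, notably the ETL and transaction-analysis cases, reduce to the same shape: parse raw records, key them, and aggregate per key. The Python sketch below computes an average transaction amount per customer. The record layout (`customer,date,amount`), the sample lines, and the function names are all assumptions made for illustration.

```python
from collections import defaultdict

def map_record(line):
    """Extract and transform: parse one CSV line and emit (customer, (amount, 1)).
    Malformed lines are skipped rather than failing the whole job."""
    parts = line.split(",")
    if len(parts) != 3:
        return
    customer, _date, amount = parts
    try:
        value = float(amount)
    except ValueError:
        return
    yield customer, (value, 1)

def reduce_average(customer, values):
    """Reduce: combine (sum, count) pairs, then divide once at the end."""
    total = sum(amount for amount, _ in values)
    count = sum(n for _, n in values)
    return customer, total / count

def average_by_customer(lines):
    """Group mapped pairs by customer and reduce each group."""
    groups = defaultdict(list)
    for line in lines:
        for key, value in map_record(line):
            groups[key].append(value)
    return dict(reduce_average(key, values) for key, values in sorted(groups.items()))

# Hypothetical raw input, including one malformed line.
raw = ["c1,2024-01-03,20.00", "c2,2024-01-03,5.50", "c1,2024-01-04,40.00", "bad line"]
averages = average_by_customer(raw)
print(averages)
```

Emitting `(amount, 1)` pairs instead of bare amounts is a deliberate choice: sums and counts can be merged in any order and in partial batches, whereas averages of averages would give wrong results.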

Challenges and Considerations

While MapReduce is a powerful tool for data management, it is not without its challenges. One of the primary limitations of MapReduce is its complexity. Writing MapReduce programs requires a good understanding of distributed computing and the specific syntax of the framework being used, such as Hadoop's implementation of MapReduce. This learning curve can be a barrier for organizations without the necessary technical expertise.

Another challenge is that MapReduce is not always the most efficient solution for all types of data processing tasks. For example, iterative processing tasks, where the output of one stage is fed back into the system as input for another stage, can be inefficient in a MapReduce framework. In such cases, other processing models like Apache Spark, which supports in-memory processing, might be more suitable.
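To make the iterative case concrete, here is a small Python sketch of finding connected components by repeatedly propagating the smallest label across edges. Each call to `one_pass` stands in for one complete MapReduce job, and the loop has to run a whole job per iteration until nothing changes. The graph and the function names are hypothetical; in a disk-based framework such as Hadoop MapReduce, each pass would also write its output to distributed storage and read it back for the next pass.

```python
from collections import defaultdict

def one_pass(labels, edges):
    """One MapReduce-style pass: every node sends its label to its neighbours (map),
    then every node keeps the smallest label it received (reduce)."""
    messages = defaultdict(list)
    for node, label in labels.items():
        messages[node].append(label)        # a node always keeps its own label as a candidate
    for a, b in edges:
        messages[a].append(labels[b])
        messages[b].append(labels[a])
    return {node: min(received) for node, received in messages.items()}

def connected_components(nodes, edges):
    """Repeat full passes until the labels stop changing; return labels and pass count."""
    labels = {node: node for node in nodes}
    passes = 0
    while True:
        new_labels = one_pass(labels, edges)
        passes += 1
        if new_labels == labels:
            return labels, passes
        labels = new_labels

# Hypothetical graph: a chain 1-2-3-4 plus an isolated node 5.
labels, passes = connected_components([1, 2, 3, 4, 5], [(1, 2), (2, 3), (3, 4)])
print(labels, passes)
```

Even this five-node graph needs several passes, because a label can travel only one edge per pass. Engines that keep intermediate data in memory between iterations avoid paying the job startup and storage cost each time.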

Lastly, while MapReduce is highly scalable, it can be resource-intensive. Running large MapReduce jobs requires significant computational resources, which can be costly. Organizations need to carefully consider the cost-benefit trade-off when deciding whether to use MapReduce for a particular task.