Top 16 Software Tools for Data Analysts 2025

Estimated Reading Time: 30 minutes

| #1 Ranking: Read how InetSoft was rated #1 for user adoption in G2's user survey-based index | Read More |

Growing market demand for data interpretation has driven the growth of advanced data analysis. It has also created opportunities for data professionals, who now rely on dedicated software to carry out a wide range of analysis tasks. Analysts need tools that perform well and deliver accurate results across tasks such as preparing data, generating predictions, running algorithms, and automating reporting.

The software should also handle standard tasks such as reporting findings and presenting results visually. Analysts can choose from a wide range of tools: some require a paid license, while others are free and open source. Analysts should choose carefully to make sure a tool meets their analytical needs.

This article presents 16 popular analysis tools, highlighting their most important characteristics and giving an example for each category. It begins with key takeaways, followed by a basic definition of data analyst tools.

Key Takeaways and Guidance

This article defines data analyst tools, describes the main categories, and gives an example tool for each category. The information below can help analysts at any level of experience choose one or more tools that fit their own analytical process. The tools were selected to reflect a range of required technical skills.

For example, technically skilled analysts can opt for versatile tools such as Python, MySQL Workbench, or RStudio. The tools presented here are used by both academic researchers and professional data analysts, and the article also points out tools that beginners can start with as they work toward becoming professionals in the field.

Data Analyst Tools Definition

Data analyst tools are the software and applications that professional data analysts use to design and carry out the analysis process. They help organizations make informed decisions, which can reduce costs and increase profits. Analysts have to decide which tools suit their organization's analysis needs; to help with that decision, this article lists leading tools in each category, considering the features and focus of each application, with an example for each category.

Tools Used by Data Analysts

To help readers understand and make use of the wide range of software available, this page discusses the most important and best-known tools for carrying out data analysis professionally.

These tools simplify complex analytic tasks, so analysts can spend more time interpreting the results and less time carrying out the analysis itself. The main types of data analyst tools are described below.

1. Business Intelligence Tools


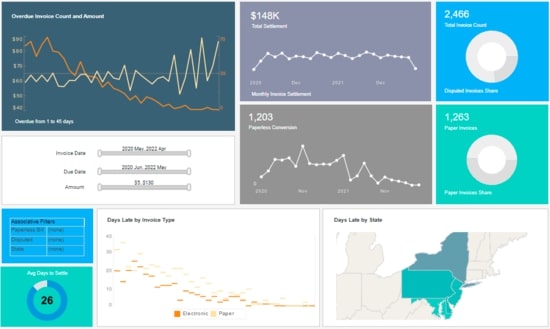

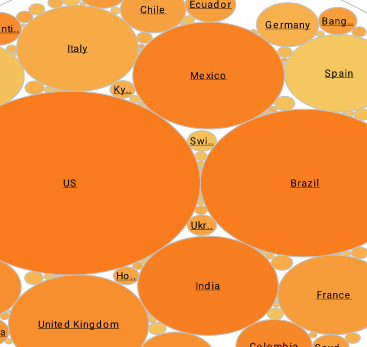

Business intelligence (BI) is an important aspect of data analytics, and InetSoft is an example of a BI tool that covers a large share of the requirements of both beginner and advanced users. InetSoft is an all-in-one tool designed to support the entire analysis process. It can be deployed in the cloud, used as Software-as-a-Service, or installed on-premises, and it supports exchanging data between third-party sources and the product itself.

2. Statistical Analysis Tools

Statistical analysis tools take a more technical approach to data analysis. They apply computational and statistical techniques to manipulate and analyze raw data and to generate insights that can inform business decisions. Several programming languages are used for statistical analysis, and the right choice depends on the data analysis and modeling scenario at hand.

In this section, we will consider one popular tool used for statistical analysis: RStudio, which uses the R programming language. There are other languages used in statistical analysis; however, for this article, we will consider R because of its popularity and wide adoption among data analysts.

RStudio Key Features

- More than 10,000 packages and extensions that can be used to conduct different types of data analysis

- Allows users to model and analyze statistical data, test hypotheses (for example, with t-tests), and analyze relationships between variables

- Supported by an active community of statisticians, scientists, and researchers who contribute by giving feedback on how the application can be improved
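In R, a two-sample t-test is a single call to `t.test()`, which by default (`var.equal = FALSE`) runs Welch's test. To show what that call computes, here is a minimal Python sketch of Welch's t statistic and its Welch-Satterthwaite degrees of freedom. The sample values are made up for illustration, and turning the statistic into a p-value would additionally need the t distribution's CDF (for example, from SciPy).

```python
from math import sqrt
from statistics import mean, variance

def welch_t_test(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(a), len(b)
    # Squared standard errors: sample variance (n - 1 denominator) over sample size
    se2_a = variance(a) / n_a
    se2_b = variance(b) / n_b
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df

# Hypothetical measurements for two groups
group_a = [5.1, 4.9, 5.6, 5.8, 6.0]
group_b = [4.2, 4.5, 4.0, 4.8, 4.4]
t, df = welch_t_test(group_a, group_b)
print(f"t = {t:.3f}, df = {df:.2f}")
```

Welch's version is used here because it does not assume the two groups share a variance, which matches R's default.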

R is a programming language created in the early 1990s by statisticians Ross Ihaka and Robert Gentleman and released as free software in 1995; it is widely used for statistical analysis. RStudio is a popular integrated development environment (IDE) for working in R.

The RStudio application is open source and runs on several operating systems, including macOS, Windows, and Linux. It can be used for data cleaning and reduction as well as analysis, and it produces reports with R Markdown.

RStudio is a versatile tool used by both academic researchers and professional data analysts. Through R, it gives statisticians access to more than 10,000 packages and extensions, which support operations such as regression and cluster analysis.

RStudio is approachable for beginners with little programming experience, because many complex statistical operations can be run with a single function call. Graphics libraries available in RStudio include ggplot2 and plotly, and these libraries are one reason many analysts prefer the tool.

Initially, students and academic researchers were the main users of R. It has since gained popularity across industries and is now used commercially by large technology companies such as Twitter, Google, Airbnb, and Facebook. Its active community of researchers, statisticians, and scientists shares ideas on new techniques and helps beginners.

3. General Purpose Programming Languages

General-purpose languages are used to develop software and applications across many domains and platforms. Programmers write programs in these languages using a formal syntax, typing out the instructions (the source code) as text.

Examples include Java, C#, PHP, C/C++, Python, and Ruby. Declarative languages such as SQL and Prolog, and languages designed for domain-specific problems, are not included in this category. This section uses Python as an example of a programming language widely used for data analysis.

Python Key Features

Python is an open-source programming language widely used to solve data analysis problems. Its simple syntax makes it an easy language to learn. Python code can be integrated with other languages such as PHP, C, C++, C#, and Java, and its libraries support advanced work such as text mining and machine learning.

Python is generally easier to write than other well-known languages such as Java. Its easy-to-learn syntax makes it popular among people without programming experience, and its open-source license and large, active community make it easy for beginners to keep up with new developments.

Depending on the business problem, analysts use Python for data crawling, modeling, cleaning, and building analysis algorithms. Its user-friendliness is one of the qualities that make it popular among data analysts: Python manages memory automatically, and as a high-level language it shields developers from the details of the underlying processor and system architecture.

Another significant benefit is portability: the same Python code can usually run on different operating systems and machines without changes. Python also has many packages and libraries that make it useful across industries.

Python is currently used by companies such as Dropbox, Reddit, and Netflix, and its text mining and machine learning capabilities make it a popular tool for advanced data analysis.

4. SQL Consoles

This list would not be complete without SQL consoles. SQL is a declarative language used to query and manage relational databases. It is widely used in the data science community, preferred by most data analysts, and applied across many data scenarios and business cases. SQL is critical to the many businesses that store their data in relational databases.

Learning SQL is essential for data analysts and gives them a competitive advantage. SQL databases are managed with database management systems such as Microsoft SQL Server, Oracle, MySQL, and PostgreSQL, and professional analysts should be comfortable working in SQL consoles because they are central to the job. This section covers MySQL, one of the most popular database management systems, through its visual tool, MySQL Workbench.

MySQL Workbench Key Features

MySQL Workbench is a visual tool for data modeling, database administration, and SQL development, among other tasks. Its object browser lets users explore the database schema and objects. The editor includes user-friendly features such as syntax highlighting, reusable snippets, and an execution history. Analysts use MySQL Workbench to model, manage, and visually design databases, to administer the MySQL environment, and to optimize SQL queries, which can improve the performance of MySQL applications.

Users can browse and view the objects in a database and write queries that insert, update, or delete data. MySQL Workbench is also useful for migrating a database from one server to another.
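The kinds of statements analysts run from a SQL editor (creating tables, inserting, updating, and deleting rows, then aggregating) can be sketched with Python's built-in `sqlite3` module. SQLite is used here only as a stand-in for a MySQL server so the example runs without one; the `orders` table and its rows are hypothetical, and MySQL's syntax differs in places, such as auto-increment columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database standing in for a MySQL server
cur = conn.cursor()

# Create a hypothetical table and insert a few rows
cur.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
cur.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 200.0), ("West", 15.0)],
)

# Update and delete rows, as one might from a SQL editor
cur.execute("UPDATE orders SET amount = amount * 1.1 WHERE region = ?", ("South",))
cur.execute("DELETE FROM orders WHERE amount < ?", (20.0,))

# Aggregate query: total order value per region
cur.execute(
    "SELECT region, ROUND(SUM(amount), 2) FROM orders GROUP BY region ORDER BY region"
)
totals = cur.fetchall()
print(totals)

conn.commit()
conn.close()
```

Parameter placeholders (`?`) are used instead of string formatting so values are passed separately from the SQL text, which avoids SQL injection.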

5. Predictive Analytics Tools

Predictive analytics refers to advanced techniques that analysts use to predict future trends. These techniques, which rely on artificial intelligence and predictive modeling, have grown in popularity in recent years, and smart solutions have simplified the predictive analytics process. Some of the BI tools outlined earlier provide easy-to-use built-in predictive analytics, but this section focuses on standalone, advanced predictive analytics tools that organizations use for various purposes.

One use of these tools is detecting fraud with AI-based pattern detection. Another is analyzing big data collected from social media activity; the resulting predictions can be used to target advertisements based on users' activity, for instance by showing ads for products a user has previously searched for.

SAS Forecasting Key Features

- Conducts forecasts to determine possible future trends

- Can perform forecasting in a hierarchical manner

- Scalable modeling that combines multiple models into an ensemble

- An extensive model repository that includes, for instance, ARIMA and ARIMAX time-series models and causal methods

SAS Forecasting for Desktop has steadily grown in popularity among data analysts and is now a prominent application with advanced features for data analysis. It offers forecasting methods such as event modeling, scenario planning, hierarchical reconciliation, and what-if analysis, and its features cover seven main areas of the forecasting process, including those just mentioned. The application takes user inputs as variables and generates predictions of future trends, and these outputs can be used to improve business performance.

SAS Forecasting for Desktop can generate large numbers of forecasts automatically, drawing on SAS Forecast Server and SAS Visual Forecasting. The solution has been on the market for a long time, and analysts, researchers, and professionals regard it as an industry leader, which makes it a popular option.

6. Data Modeling Tools

Data modeling tools are an important part of data analysis and belong on any list of analyst tools. Data modeling uses diagrams to describe how data is structured within business systems, representing the flow of data and the relationships and connections between different stages.

Firms use data modeling tools to get a clear visual representation of the information that affects them and to understand how different datasets relate. Data analysts play a critical role in creating and interpreting data models.

The ability to identify, analyze, and classify changes in the information stored in databases helps a company understand how its information and data flow. This section presents Erwin Data Modeler (Erwin DM) as an example of a tool for developing models and presenting data asset designs.
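To make entities and relationships concrete, the sketch below describes a tiny logical model in Python, with two hypothetical entities (`customer` and `orders`) linked by a one-to-many relationship, and generates SQL DDL from it. This loosely mirrors how modeling tools forward-engineer a physical schema from a diagram; it is not Erwin's file format or API. The generated schema is checked by executing it in an in-memory SQLite database.

```python
import sqlite3
from dataclasses import dataclass, field

@dataclass
class Entity:
    name: str
    attributes: dict[str, str]  # column name -> SQL type
    primary_key: str
    foreign_keys: dict[str, str] = field(default_factory=dict)  # column -> referenced entity

def to_ddl(entity: Entity, model: dict[str, Entity]) -> str:
    # Emit one CREATE TABLE statement with primary and foreign key constraints
    cols = [f"{col} {sql_type}" for col, sql_type in entity.attributes.items()]
    cols.append(f"PRIMARY KEY ({entity.primary_key})")
    for col, ref in entity.foreign_keys.items():
        ref_pk = model[ref].primary_key
        cols.append(f"FOREIGN KEY ({col}) REFERENCES {ref} ({ref_pk})")
    return f"CREATE TABLE {entity.name} (\n  " + ",\n  ".join(cols) + "\n);"

model = {
    "customer": Entity("customer", {"customer_id": "INTEGER", "name": "TEXT"}, "customer_id"),
    "orders": Entity(
        "orders",
        {"order_id": "INTEGER", "customer_id": "INTEGER", "total": "REAL"},
        "order_id",
        {"customer_id": "customer"},  # many orders belong to one customer
    ),
}

ddl = "\n".join(to_ddl(e, model) for e in model.values())
print(ddl)

conn = sqlite3.connect(":memory:")
conn.executescript(ddl)  # raises an error if the generated DDL is invalid
tables = [
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type='table' ORDER BY name")
]
print(tables)
```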

Erwin Data Modeler Key Features

- Generates data models automatically, leading to improvement in efficiency during the analytical process

- Uses one interface when dealing with different types of data at different locations

- Available in 7 different versions to suit the needs of different businesses

Erwin Data Modeler can locate, visualize, design, and create quality datasets for an organization, which helps reduce complexity.

It helps data analysts understand their data sources, making it easier for businesses to achieve their goals. Its automatic generation of models and designs improves effectiveness and productivity during analysis, and it supports different model forms to meet users' requirements. Erwin DM can work with structured and unstructured data, whether stored on a local machine or remotely in the cloud, to support diverse business requirements.

7. ETL Tools

Many organizations around the world use ETL (extract, transform, load) tools. As a business grows, it needs to extract data from different sources, transform it, and load it into a master database for analysis and querying. ETL tools come in several types, including batch, real-time, and cloud-based ETL, each with features suited to different business needs. Analysts use ETL tools in the technical processes of data management within an organization; Talend is one example.
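The extract, transform, and load steps can be sketched in a few lines of Python. This is not how Talend works internally (Talend itself is Java-based and its jobs are designed visually); it is a minimal illustration that assumes a hypothetical CSV export as the source and an in-memory SQLite table as the "master database".

```python
import csv
import io
import sqlite3

# Extract: read a hypothetical CSV export (inlined so the example is self-contained)
source = io.StringIO(
    "order_id,region,amount\n"
    "1, north ,120.50\n"
    "2,South,80\n"
    "3,north,not_a_number\n"
    "4,WEST,15.25\n"
)
rows = list(csv.DictReader(source))

# Transform: normalize region names, convert amounts, and reject unparseable rows
clean, rejected = [], []
for row in rows:
    try:
        amount = float(row["amount"])
    except ValueError:
        rejected.append(row)
        continue
    clean.append((int(row["order_id"]), row["region"].strip().title(), amount))

# Load: write the cleaned rows into the target table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", clean)
conn.commit()

loaded = conn.execute("SELECT region, amount FROM sales ORDER BY order_id").fetchall()
print(loaded)
print(f"rejected {len(rejected)} row(s)")
```

Keeping rejected rows in a separate list, rather than silently dropping them, gives a simple hook for the kind of data quality review that ETL platforms automate.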

Talend Key Features

- Collects and transforms data once it has been prepared, integrated, and added into the cloud pipeline design

- Identifies and resolves any issues that could influence the quality of the data under analysis using its data governance feature

- Promotes data sharing through comprehensive deliveries, such as APIs

Talend is a Java-based ETL tool used by analysts around the world for data management. It supports data integration, cloud storage, and data maintenance, can process large numbers of data records, and provides solutions for a wide range of data projects. Its features include data integration, data preparation, cloud pipeline design, and Stitch data loading, which together meet many companies' data administration needs.

Talend helps consolidate and analyze large datasets to obtain actionable intelligence. Beyond data collection and transformation, it also provides data protection and supports cloud-based data centers that can be managed remotely. Talend's data fabric maintains data quality, integrity, and governance by assessing and cleaning data throughout its lifecycle, so that only healthy data is analyzed.

Talend also includes a data-sharing feature: API integration allows clean data to be shared internally and externally among an organization's stakeholders.

8. Automation Tools

Automation makes the analysis of different types of data more efficient. In data analytics, automation means using processes and systems to complete analytic tasks with limited human intervention, and it has improved data analysis especially for companies that deal with big data. What an automation tool can achieve depends on its complexity: simple tools can fit data into pre-existing models, while advanced tools can explore data for analysis and provide a feedback channel for reviewing the findings. This section uses the Jenkins automation server as an example of how automation tools affect businesses.
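The simplest kind of automation described above, fitting new data into a pre-existing model with a feedback channel for review, might look like the following Python sketch. The model coefficients, tolerance, and record fields are hypothetical. In practice a scheduler or automation server would run a script like this on a timer or trigger, rather than a person running it by hand.

```python
from dataclasses import dataclass

# Hypothetical pre-existing model: expected_sales = SLOPE * ad_spend + INTERCEPT
SLOPE, INTERCEPT = 2.0, 5.0
TOLERANCE = 0.15  # flag records deviating from the model by more than 15%

@dataclass
class Record:
    region: str
    ad_spend: float
    sales: float

def score(records):
    """Apply the fixed model to each record; return (scored, flagged_for_review)."""
    scored, flagged = [], []
    for r in records:
        expected = SLOPE * r.ad_spend + INTERCEPT
        deviation = (r.sales - expected) / expected
        scored.append((r.region, round(expected, 2), round(deviation, 3)))
        if abs(deviation) > TOLERANCE:
            flagged.append(r.region)  # feedback channel: a human reviews these
    return scored, flagged

batch = [Record("North", 10, 25), Record("South", 20, 30), Record("West", 40, 90)]
scored, flagged = score(batch)
print(scored)
print("needs review:", flagged)
```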

Jenkins Key Features

- An extensible automation server that can be used as a simple continuous integration (CI) server or as a continuous delivery hub for projects

- Can be easily configured using its web interface

- Can distribute work through different machines

- Offers hundreds of plugins that integrate with practically every tool in the CI/CD toolchain

Jenkins is a self-contained, open-source server that automates the building, testing, and deployment of projects, and it enjoys extensive community support.

Jenkins can be extended through plugins, which expands its role in automating data analysis processes, and it can distribute work across multiple machines and platforms to complete jobs faster. Its hundreds of interconnected plugins and its security features have contributed to its widespread adoption around the world.

9. Unified Data Analytics Engines

Unified data analytics engines suit organizations that generate massive datasets and therefore need big data management: tools that control a complex data environment so the organization can make informed decisions from the available data.

AI and machine learning play an important role in big data management. Apache Spark is an example of a big data analytics tool; it supports large-scale data processing within a large ecosystem.

Apache Spark Key Features

- High performance in the processing of large sets of data

- A large ecosystem of features, such as graph computation and machine learning

- A large number of operators that act on sets of big data
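Many of Spark's operators follow a map-then-reduce pattern. The pure-Python sketch below imitates the `flatMap`, `map`, and `reduceByKey` transformations of Spark's RDD API on a tiny word-count input to show the idea. It runs on a single machine and is not PySpark; in Spark, the same chain would be written against an RDD (for example, starting from `sc.parallelize(lines)`) and executed in parallel across a cluster.

```python
from collections import defaultdict
from functools import reduce

lines = ["spark processes big data", "big data needs big clusters"]  # hypothetical input

# flatMap: split every line into words, flattening the result into one list
words = [word for line in lines for word in line.split()]

# map: turn each word into a (key, value) pair
pairs = [(word, 1) for word in words]

# reduceByKey: group values by key, then combine each group with a reduce function
def reduce_by_key(pairs, func):
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(func, values) for key, values in groups.items()}

counts = reduce_by_key(pairs, lambda a, b: a + b)
print(sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])))
```

Because the reduce function is associative, Spark can apply it within each partition first and then merge the partial results, which is what lets this pattern scale to very large datasets.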

Apache Spark is used at organizations such as Netflix, eBay, and Yahoo, which have used it to process petabytes of data, showing that the tool is suited to big data management. Spark uses in-memory processing to speed up machine learning, and it supports code reuse across workloads, which lets it run queries against big data quickly. It provides APIs in several programming languages, including Scala, Java, Python, and R, so developers can build applications in the language they prefer; this ease of use is one reason Spark is widely used for analytic queries on big data. It can also run multiple workloads, including machine learning, real-time analytics, and graph processing.

Apache Spark is a unified engine whose framework covers many different tasks. Spark Core is the foundation of the tool and supports all of Spark's functionality, such as graph processing and machine learning. Spark's ability to process big data quickly makes it suitable for big data workloads in industries such as manufacturing, healthcare, and financial services, and it can also be deployed in the cloud. By unifying these features, Apache Spark makes processing big data workloads faster and more efficient, which has made it a popular tool among data analysts who manage big data.

10. Spreadsheet Applications

Spreadsheets are a popular way to record and analyze repetitive data, particularly in organizations that do not manage large databases. Using spreadsheets does not require technical experience, so employees with minimal technical skills can use them for data analysis. The most popular spreadsheet application is Excel.

Excel Key Features

- Part of the Microsoft Office family, which promotes its use with other Microsoft features

- Can be employed in recording and analyzing repetitive data

- Has pivot tables for summarizing data with simple calculations such as sum, average, minimum, and maximum, and supports formulas built across designated columns and rows

Excel is a traditional analysis tool that is still used extensively around the world, and many people rely on it throughout their careers. It is a multipurpose data analysis tool that works by manipulating rows and columns; once the data has been analyzed, the findings can be shared with the desired recipients, using Excel as a reporting tool. Excel works much like an electronic accounting worksheet. Although it supports macros, its automation capabilities are more limited than those of the dedicated automation tools discussed in this article.

Excel can manipulate, calculate, and evaluate quantitative data. It handles simple, repetitive calculations such as addition, subtraction, average, minimum, and maximum, and it lets users build formulas in different ways. These features have earned Excel its place as a traditional data management tool.
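What a pivot table does with sum, average, minimum, and maximum can be sketched in Python: group rows by one field and summarize another field per group. The sales rows below are hypothetical; in Excel the same summary would be built through the PivotTable interface rather than code.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical worksheet rows: (region, product, revenue)
rows = [
    ("North", "Widget", 120), ("North", "Gadget", 80),
    ("South", "Widget", 200), ("South", "Widget", 50),
    ("West", "Gadget", 90),
]

# Group revenue values by region (the pivot table's "Rows" field)
by_region = defaultdict(list)
for region, _product, revenue in rows:
    by_region[region].append(revenue)

# Summarize each group the way a pivot table's "Values" field would
pivot = {
    region: {"sum": sum(v), "average": mean(v), "min": min(v), "max": max(v)}
    for region, v in sorted(by_region.items())
}
for region, stats in pivot.items():
    print(region, stats)
```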

11. Industry-Specific Analytics Tools

Most of the tools discussed in this article fit analysts' daily workflows across industries, but some tools are better suited to specific types of organizations, so industry-specific solutions also deserve a place on this list. Qualtrics, which is used in many industries around the globe, is covered here because of its industry-specific features focused on market research.

Qualtrics Key Features

- Facilitates exploration of experiences, such as customer, brand, employee, and product experiences, that are achieved through undertaking surveys and generating reports based on the feedback received

- The company provides supplementary research services through their internal experts

Qualtrics is data analysis software with advanced data management features, used by market research organizations around the world. It is organized around four product pillars: brand, customer, employee, and product experience. Qualtrics can run surveys and generate reports that help improve these experiences. It supports a wide range of survey question types, from multiple-choice questions to heat maps, and can translate survey questions into different languages.

These features provide additional research information and enrich the feedback received. Qualtrics also lets users access resources from more than one location, and this centralized access speeds up the review of feedback and of strategies to improve stakeholders' experience.

Automating tasks with technology has become a significant part of running a business. Qualtrics provides a drag-and-drop feature that integrates with systems organizations already use, such as messaging, ticketing, and CRM. This lets users automatically send notifications to the right recipients and analyze customer and employee experiences. Qualtrics also has a directory feature that connects various forms of collected data, such as SMS, voice, and video recordings, and its iQ tool analyzes unstructured data so that users can apply predictive analytics to build detailed customer journeys.

12. Data Science Platforms

Data science underlies several of the software solutions already discussed in this list, but it deserves its own category because it has become one of the most sought-after skills of this decade. Data science platforms contain advanced analytics models that simplify the data analysis process and help users gain in-depth insights from their data. RapidMiner, a leading platform that combines simplified workflows with deep data analysis, illustrates the value of these platforms.

RapidMiner Key Features

- Relies on artificial intelligence and makes use of a large number of algorithms

- Supports prescriptive and descriptive data analysis

- Supports integration with R and Python

- Supports connections with different types of databases, such as Oracle

Academic and professional analysts use RapidMiner in their work, and it has been adopted by more than 40,000 institutions that depend heavily on analytics. The tool can be used to prepare data, model operations, and implement machine learning, unifying the whole data science cycle. The software is built on three automated data science platforms and five core platforms that support the design and deployment of analytic processes.

It also includes data exploration features, such as descriptive statistics and visualizations, that make it easier to find the desired information, and a predictive analytics feature that lets users model a wide range of tasks.

13. Data Cleansing Platforms

As data volumes grow, so does the likelihood of errors. Data cleansing tools help identify and correct errors that could otherwise compromise data analysis.

These tools allow analysts to identify inconsistencies in a dataset, such as duplicates and other errors, and remove them to obtain a healthy dataset that produces reliable results.

Before data cleansing tools were developed, analysts cleaned data manually, a practice that was unreliable because it was prone to error. Data cleansing tools make the process faster, so analysts can finish it quickly and move on to other tasks. One example of data cleansing software is OpenRefine.

OpenRefine Key Features

- Facilitates exploration of large sets of data easily

- Cleans data using features such as clustering and facets, and transforms the data into the desired format

- Has the capacity to reconcile and match data with various web services through a large list of plugins and extensions
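OpenRefine offers several clustering methods; its default, key collision with the "fingerprint" keying function, can be approximated in Python as below. The function follows the fingerprint steps OpenRefine documents (trim, lowercase, strip punctuation, normalize accented characters to ASCII, split into tokens, sort and de-duplicate the tokens, rejoin), but it is a simplified re-implementation, not OpenRefine's code, and the company names are made up.

```python
import string
import unicodedata
from collections import defaultdict

def fingerprint(value: str) -> str:
    # Trim surrounding whitespace and lowercase
    value = value.strip().lower()
    # Remove ASCII punctuation
    value = value.translate(str.maketrans("", "", string.punctuation))
    # Normalize accented characters to plain ASCII (e.g. "é" -> "e")
    value = unicodedata.normalize("NFKD", value).encode("ascii", "ignore").decode("ascii")
    # Split into tokens, de-duplicate, sort, and rejoin
    return " ".join(sorted(set(value.split())))

def cluster(values):
    """Group values whose fingerprints collide; keep only groups with >1 distinct value."""
    groups = defaultdict(set)
    for v in values:
        groups[fingerprint(v)].add(v)
    return [sorted(g) for g in groups.values() if len(g) > 1]

companies = ["Acme Inc.", "acme inc", "Inc. Acme", "Café Luna", "Cafe Luna", "Globex"]
for group in cluster(companies):
    print(group)
```

Sorting the tokens is what makes "Inc. Acme" and "Acme Inc." collide, while the ASCII normalization merges "Café Luna" with "Cafe Luna"; an analyst would then pick one canonical spelling for each cluster.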

OpenRefine, previously known as Google Refine, is a powerful data cleansing tool for working with "messy" data. It is an open-source, Java-based application that can handle large datasets and lets analysts clean data and change its format before analysis.

OpenRefine can import data in many formats and presents it in a spreadsheet-like layout, so analysts can load raw data from multiple sources. The tool then helps clean the data into a healthy dataset that produces reliable results once analyzed.

OpenRefine keeps users' data private unless they choose to share it with other parties. It runs as a server on the user's own computer and is operated through the local web browser, so no data leaves the machine unless the user initiates the transfer.

The interface is available in more than fifteen languages, which makes it accessible to analysts around the world. OpenRefine is a good choice for analysts who work with large datasets that must be cleansed before their results can be trusted.

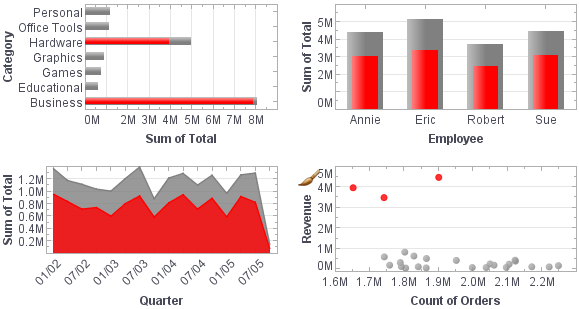

14. Data Visualization Tools and Platforms

Data visualization is an indispensable part of data analysis: it is how analysts turn their findings into visual representations. Professional data visualization tools go well beyond simple ones. Advanced visualization capabilities are built into BI tools and are also available through free and paid charting libraries.

PowerPoint and Excel also provide visualization features, but they fall short of the advanced needs of data analysts, who usually rely on the specialized visualizations in BI tools and charting libraries. The example explored in this section is Highcharts, a popular charting library.

Highcharts Key Features

- Free for non-commercial use, such as by nonprofit organizations or on school websites

- Designed for a technical audience, mainly software developers

- Uses a shared JavaScript engine to build charts for both web and mobile platforms

- Includes a WebGL-powered Boost module that can render millions of data points directly in the browser

Highcharts is an interactive JavaScript charting library for web pages. Its extensive set of chart types supports the interactive charts that software developers build into their projects.

Charts can be fed live data that updates in real time, or built from existing data in CSV or JSON format. Highcharts also adapts each chart to its type and to the requirements of the project, and it automatically places elements that are not part of the graph itself in their optimal positions. It supports many chart types, so analysts can present their findings in the visual form that suits them best.
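Highcharts charts are configured with a JavaScript options object. A minimal sketch of preparing that object from CSV on the server side follows, written in Python to match the other examples. It assumes a simple layout (first column holds the x-axis categories, every other column is a numeric series); the function name `csv_to_highcharts_options` is hypothetical, while the keys `chart.type`, `title.text`, `xAxis.categories`, and `series` are standard Highcharts options.

```python
import csv
import io
import json


def csv_to_highcharts_options(csv_text: str, chart_type: str = "line", title: str = "") -> dict:
    """Build a Highcharts-style options dict from CSV text.

    Assumes the first column holds x-axis categories and every other
    column holds numeric values for one series each.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    rows = [row for row in reader if row]  # skip blank lines
    categories = [row[0] for row in rows]
    series = [
        {"name": name, "data": [float(row[i]) for row in rows]}
        for i, name in enumerate(header[1:], start=1)
    ]
    return {
        "chart": {"type": chart_type},
        "title": {"text": title},
        "xAxis": {"categories": categories},
        "series": series,
    }


if __name__ == "__main__":
    data = "month,sales,returns\nJan,120,4\nFeb,135,6\n"
    options = csv_to_highcharts_options(data, chart_type="column", title="Monthly sales")
    # The JSON can be sent to a web page and passed to Highcharts.chart('container', options).
    print(json.dumps(options, indent=2))
```

Preparing the options on the server keeps the browser code trivial; alternatively, Highcharts' own data module can parse CSV directly in the browser.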

Developers can use Highcharts under a free license or purchase a commercial one. Users can download and edit the Highcharts source code regardless of the platform they work on. The library is aimed at analysts and developers who have experience with JavaScript charting engines, and for them its easy-to-use API and flexibility make it an excellent visualization tool.

15. Relational Databases

Relational databases allow users to store and access related data points. They represent data as tables, a model that is easy to understand, and each record is identified by a unique ID called a key. BI tools retrieve data from relational databases for analysis, and these databases are easy to create and use.

A data analyst can run queries that insert, update, and delete records quickly, making it possible to respond to business needs promptly. Relational databases also enforce data integrity through constraints such as primary and foreign keys, and their reliability has made them popular in the BI industry.
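The ideas above (tables, keys, update and delete queries, integrity constraints, and joining related data for analysis) can be sketched with Python's built-in `sqlite3` module. SQLite stands in for a server database such as MySQL here only because it needs no setup; the table names and data are hypothetical, and the `PRAGMA foreign_keys` switch is SQLite-specific.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two related tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript("""
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,   -- unique key for each record
            name        TEXT NOT NULL
        );
        CREATE TABLE orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
            amount      REAL NOT NULL
        );
    """)
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(10, 1, 250.0), (11, 1, 75.5), (12, 2, 40.0)],
    )
    return conn


def totals_by_customer(conn: sqlite3.Connection):
    """Join the related tables and total order amounts per customer."""
    query = """
        SELECT c.name, SUM(o.amount)
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.customer_id
        GROUP BY c.customer_id
        ORDER BY c.name
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    conn.execute("UPDATE orders SET amount = 80.0 WHERE order_id = 11")
    conn.execute("DELETE FROM orders WHERE order_id = 12")
    print(totals_by_customer(conn))  # prints: [('Acme', 330.0)]
    try:
        # Violates the foreign key: there is no customer 99.
        conn.execute("INSERT INTO orders VALUES (13, 99, 10.0)")
    except sqlite3.IntegrityError as exc:
        print("rejected:", exc)
```

The rejected insert shows the database itself guarding integrity: an order cannot reference a customer that does not exist.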

MySQL Key Features

MySQL is an open-source relational database management system (DBMS) suitable for both small and large applications. It runs on top of the operating system and stores its relational databases on the computer's disk. MySQL supports network access and lets database developers check database integrity.

Many database-driven applications, such as Joomla and WordPress, use MySQL, and it is also used by popular social media sites such as Facebook.

16. OLAP Tools

OLAP tools allow data analysts to interactively analyze multidimensional data from different perspectives. This computing approach is effective at answering multidimensional analytical queries because it is fast and responsive. OLAP is part of business intelligence and has three basic operations: consolidation (roll-up), drill-down, and slicing and dicing. OLAP tools work alongside relational databases, data mining, and reporting features.
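To make the three basic operations concrete, here is a toy sketch over an in-memory list of hypothetical sales facts. Real OLAP engines precompute and index cube aggregates; this only shows what each operation returns. The data and the helper names `aggregate`, `slice_cube`, and `dice` are invented for illustration.

```python
from collections import defaultdict

# Hypothetical fact rows: (year, quarter, region, product, sales)
FACTS = [
    (2024, "Q1", "East", "Widgets", 100),
    (2024, "Q1", "West", "Widgets", 80),
    (2024, "Q2", "East", "Gadgets", 60),
    (2024, "Q2", "West", "Widgets", 90),
    (2025, "Q1", "East", "Gadgets", 70),
    (2025, "Q1", "West", "Gadgets", 50),
]
DIMENSIONS = ("year", "quarter", "region", "product")


def aggregate(rows, by):
    """Sum sales grouped by the named dimensions.

    Fewer dimensions = rolled up (coarser); more dimensions = drilled down (finer).
    """
    idx = [DIMENSIONS.index(d) for d in by]
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[i] for i in idx)] += row[-1]
    return dict(sorted(totals.items()))


def dice(rows, **criteria):
    """Dice: keep rows whose values fall in the allowed set for each given dimension."""
    return [
        row for row in rows
        if all(row[DIMENSIONS.index(d)] in allowed for d, allowed in criteria.items())
    ]


def slice_cube(rows, dimension, value):
    """Slice: fix a single dimension to one value."""
    return dice(rows, **{dimension: {value}})


if __name__ == "__main__":
    print(aggregate(FACTS, ["year"]))                                 # roll-up to years
    print(aggregate(FACTS, ["year", "quarter"]))                      # drill down to quarters
    print(aggregate(slice_cube(FACTS, "region", "East"), ["year"]))   # slice
    print(aggregate(dice(FACTS, region={"East", "West"}, product={"Widgets"}), ["region"]))  # dice
```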

Xplenty Key Features

- Allows users to run multidimensional analytical queries

- Computes complex analytical and ad hoc queries quickly

- Offers front-end flexibility

- Useful for sales analysis, forecasting, and financial reporting

Xplenty can connect to more than 200 data sources and SaaS applications, such as Google Analytics, HubSpot, and Salesforce. It can integrate data from multiple sources and deliver it to multiple destinations.

Xplenty is user-friendly enough for people with no coding experience, and it offers reliable support for users who run into problems.

Best Tools for the Beginner Data Analyst

Modern solutions help beginner data analysts learn and carry out analytical work effectively. Beginners can use various BI and analytics tools to gain skills quickly and start working as analysts. When choosing analyst tools, beginners should focus on tools that are open-source and used by a large community.

A large community makes it easy for beginners to find answers to problems they encounter while learning a tool. Cost is another important factor, and choosing open-source tools helps beginners keep expenses down.

One tool that fits the description above is RStudio. It has an active community consisting of statisticians, scientists, and researchers who contribute by giving feedback on how the application can be improved. The community also provides assistance to beginners on the basic challenges that they face while learning to use the application.

Another good choice for beginner data analysts is Python, a general-purpose programming language. It is more approachable to write than other well-known languages such as Java, and its easy-to-learn syntax makes it popular among people without programming experience.

Python meets the needs of programmers at every level of expertise. It is easy to learn for users with no programming skills, and it is also useful for programmers experienced in other languages such as Java, C#, and C/C++.

Python is therefore a good fit for beginners: as their expertise grows, they will not need to switch languages. It is also open-source, and code written in Python runs on all major operating systems.
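To illustrate the readability attributed to Python above, here is a short script a beginner could follow. It reads a small CSV dataset and averages revenue per region using only the standard library; the data and column names are invented, and in practice the CSV would usually be read from a file with `open()`.

```python
import csv
import io
import statistics

# A small, made-up dataset embedded as text for the example.
CSV_DATA = """region,revenue
East,1200
West,950
East,1430
West,1010
"""


def revenue_by_region(csv_text):
    """Return {region: average revenue} for a two-column CSV."""
    by_region = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_region.setdefault(row["region"], []).append(float(row["revenue"]))
    return {region: statistics.mean(values) for region, values in by_region.items()}


if __name__ == "__main__":
    print(revenue_by_region(CSV_DATA))  # prints: {'East': 1315.0, 'West': 980.0}
```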